Road traffic and network traffic have a lot in common. Just like vehicles on the road, network packets can take a wrong turn or be delayed by congestion. Unlike vehicles, however, which are hardly ever lost, network packets are lost fairly often. For real-time interactive video, for example a Skype call, it is extremely important that the media arrives on time, because it should be played out at the same rate as it was captured and without much buffering delay. In this blog post we’ll discuss how media is compressed, how it is transmitted over the network, and the various error resilience mechanisms.

Video transportation, coding and decoding

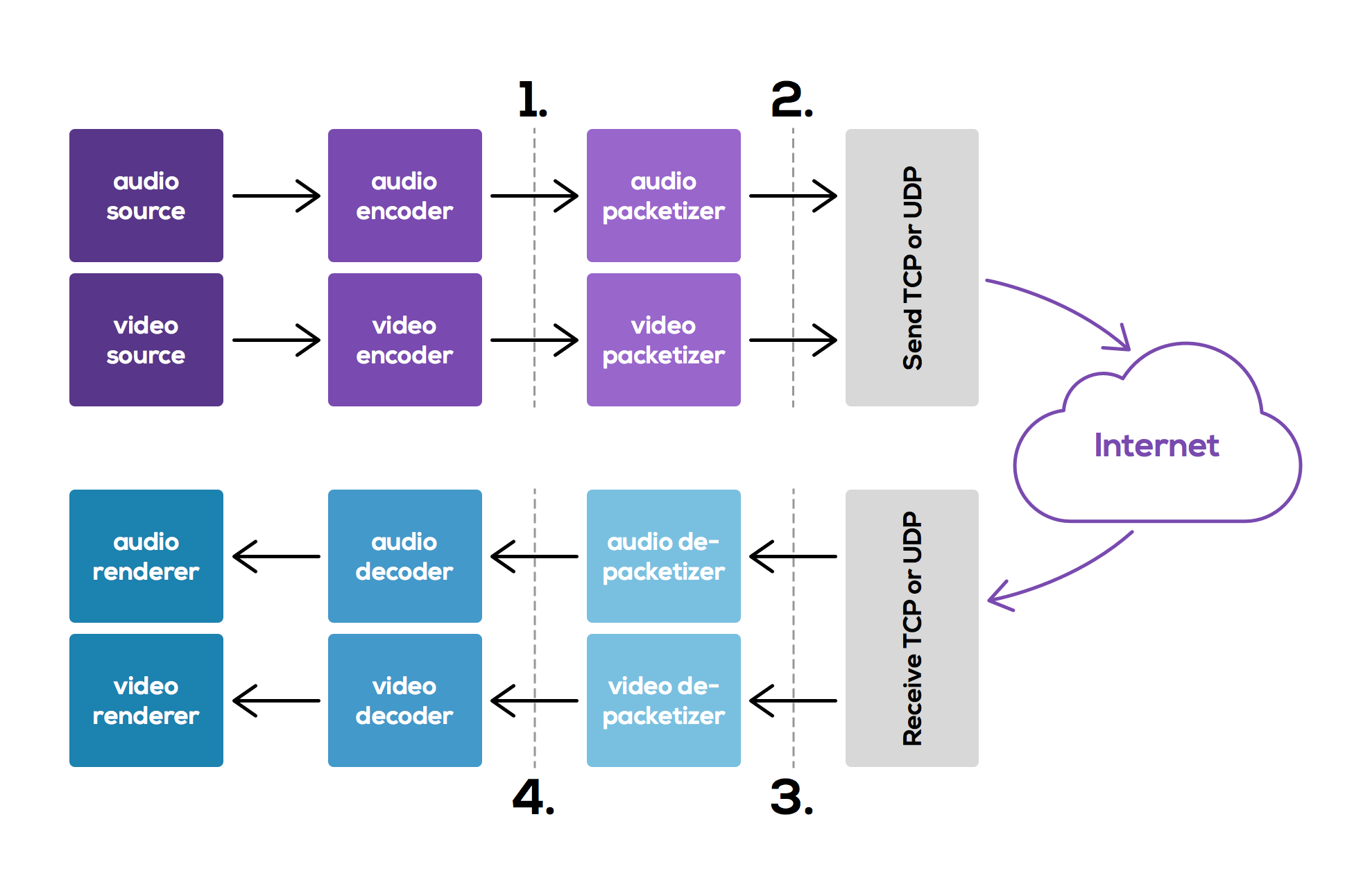

Before we go into video compression and error resilience mechanisms, here is a quick recap of how video and audio are transported from the sender’s microphone and camera to the receiver’s screen and audio output.

The raw media data is captured at the sender’s endpoint, the frames are encoded with the chosen codec, and sent over the network in packets. At the receiver’s endpoint the data packets are put together into frames. The decoder then decodes those frames into raw media and plays them out.
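The packetization and reassembly steps described above can be sketched as follows. This is a minimal illustration, not how any particular WebRTC stack does it: the `Packet` fields, the `MAX_PAYLOAD` value, and the helper names are all assumptions made for the example, and real RTP packets carry timestamps and a marker bit rather than an explicit packet count.

```python
from dataclasses import dataclass
from typing import List, Optional

MAX_PAYLOAD = 1200  # assumed payload size in bytes, chosen for illustration


@dataclass
class Packet:
    seq: int       # running sequence number across the whole stream
    frame_id: int  # frame this packet belongs to
    index: int     # position of this packet within its frame
    count: int     # total number of packets in the frame
    payload: bytes


def packetize(frame_id: int, encoded: bytes, first_seq: int) -> List[Packet]:
    """Split one encoded frame into packets of at most MAX_PAYLOAD bytes."""
    chunks = [encoded[i:i + MAX_PAYLOAD]
              for i in range(0, len(encoded), MAX_PAYLOAD)] or [b""]
    return [Packet(first_seq + i, frame_id, i, len(chunks), chunk)
            for i, chunk in enumerate(chunks)]


def reassemble(packets: List[Packet]) -> Optional[bytes]:
    """Return the encoded frame, or None while any packet is still missing."""
    if not packets:
        return None
    by_index = {p.index: p.payload for p in packets}
    if set(by_index) != set(range(packets[0].count)):
        return None  # incomplete: the decoder cannot be fed yet
    return b"".join(by_index[i] for i in range(packets[0].count))
```

In this sketch a frame is handed to the decoder only once every one of its packets has arrived, in any order, which is why a single lost packet can stall a whole frame.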

If some of the packets are lost on the way, the receiver can request retransmission of the missing packets from the sender and wait for them to arrive. This works perfectly well on low-latency networks, as the dejitter buffer at the receiver is small enough to preserve interactivity. When the delays are large, a retransmitted packet may arrive too late to be played out, so the endpoints have to rely on more complex forms of error resilience, e.g., Forward Error Correction or Full Intra Requests.

Video compression methods

To use the network as efficiently as possible, video data is compressed using a video codec. Compression happens per video frame, and different types of frames are used to make compression work: Intra-frames (I-frames), Predictive-frames (P-frames) and Bipredictive-frames (B-frames). As B-frames are encoded using both past and future frames, the encoder has to wait for those future frames, which adds delay; B-frames are therefore not used in real-time interactive video. Hence, in this blog post we will only explain the basic concepts related to I- and P-frames.

An I-frame contains a full picture (i.e., it is spatially compressed), like a conventional static image file. Therefore, I-frames are independent frames, i.e., they do not depend on another frame for decoding them.

P-frames are dependent frames and contain only the changes in the image from the previous frame (i.e., these frames are temporally compressed). P-frames are therefore much smaller than I-frames, although how much smaller depends on the amount of motion between frames (i.e., how large the delta between frames is). Consequently, fewer bits are needed to transport the video stream. Take, for example, the clip below that was recorded at a downhill racing competition. Most of the picture stays unchanged throughout the clip; only the moving parts, namely the car and the spectators, need to be coded into the P-frames.

I-frames are generated to create a new reference point for P-frames. An I-frame is also created when the picture changes too much, e.g., due to panning, a scene change, or a lot of motion, or when burst loss occurs.
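To make the relationship between I-frames and P-frames concrete, here is a toy sketch that treats a frame as a flat list of pixel values. It is only an illustration of the idea of coding changes against a reference: real codecs use motion-compensated prediction and transform coding, and the function names below are made up for this example.

```python
from typing import Dict, List

Frame = List[int]  # toy frame: one integer per pixel


def encode_p_frame(reference: Frame, current: Frame) -> Dict[int, int]:
    """Keep only the pixels that differ from the reference frame."""
    return {i: new for i, (old, new) in enumerate(zip(reference, current))
            if old != new}


def decode_p_frame(reference: Frame, delta: Dict[int, int]) -> Frame:
    """Rebuild the current frame by applying the delta to the reference."""
    frame = list(reference)
    for i, value in delta.items():
        frame[i] = value
    return frame


# The I-frame is the full picture; the next frame changes two pixels only.
i_frame = [10] * 16
next_frame = list(i_frame)
next_frame[3] = 200
next_frame[4] = 180

delta = encode_p_frame(i_frame, next_frame)  # carries 2 of the 16 values
restored = decode_p_frame(i_frame, delta)    # equals next_frame
```

The sketch also shows why a P-frame is useless on its own: `decode_p_frame` needs the reference, so a receiver that never got the I-frame (or lost part of it) cannot reconstruct the picture.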

Error Resilience Mechanisms

The IETF has defined error resilience mechanisms that can be used to help resolve issues of lost media data. We’ll go through the various mechanisms defined in rtcweb-rtp-usage: Negative Acknowledgement (NACK), Full Intra Request (FIR), Picture Loss Indication (PLI), and Slice Loss Indication (SLI).

A receiver can signal to the sender that a single packet or a burst of packets was lost. On receiving this signal, the sender reacts appropriately. Typical responses are:

- repairing the loss reactively, after the receiver has reported it:

  - in the case of a single loss, the sender can retransmit the requested RTP packet.

  - when burst loss occurs or a new participant joins, the receiver may no longer be able to decode the stream, in which case the sender may choose to send an I-frame. Sending an I-frame creates a burst of packets and is typically used as a last resort.

- repairing the loss proactively by sending Forward Error Correction (FEC) packets, which contain a combination of source RTP packets. We will discuss proactive error resilience in a future blog post.
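On the receiving side, telling a single loss from a burst loss starts with finding the gaps in the received sequence numbers. The sketch below is one possible way to do that; the `burst_threshold` value and the mapping of a burst to a PLI are assumptions made for illustration, and sequence number wrap-around (RTP sequence numbers are 16 bits) is ignored to keep it short.

```python
from typing import Iterable, List


def missing_sequence_numbers(received: Iterable[int]) -> List[int]:
    """List the sequence numbers absent between the lowest and highest seen."""
    seen = sorted(set(received))
    missing: List[int] = []
    for lower, upper in zip(seen, seen[1:]):
        missing.extend(range(lower + 1, upper))
    return missing


def choose_feedback(missing: List[int], burst_threshold: int = 3) -> str:
    """Pick a feedback message from the longest run of consecutive losses.

    burst_threshold is a hypothetical tuning knob, not a value from any spec.
    """
    if not missing:
        return "none"
    longest = run = 1
    for previous, current in zip(missing, missing[1:]):
        run = run + 1 if current == previous + 1 else 1
        longest = max(longest, run)
    return "NACK" if longest < burst_threshold else "PLI"
```

For example, receiving packets 1, 2, 4, 5 and 9 leaves 3, 6, 7 and 8 missing; the run 6 to 8 counts as a burst under this threshold, so the sketch would ask for a picture refresh rather than individual retransmissions.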

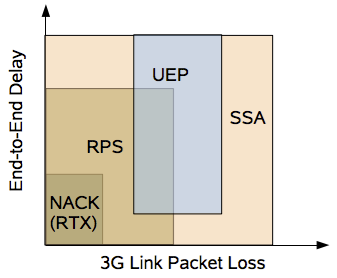

The figure below shows the applicability of some of the error-resilience schemes to real-time interactive video streams.

Applicability of an error-resilience scheme based on the amount of packet loss and link latency. More details in Varun’s Ph.D. thesis.

Negative acknowledgements (NACK)

NACKs are generated by the receiver when, for example, a packet in a multimedia stream is lost (this is a generic mechanism that can be applied to both audio and video streams). The sender responds to the NACK by retransmitting the requested packet if it still has the packet in its send cache and believes that the packet will arrive in time for decoding, given the observed round-trip time.
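The sender-side decision described above can be sketched like this. It is a simplified model under stated assumptions: `SendCache`, `handle_nack`, the cache capacity, and the `time_left_ms` estimate of how long the receiver can still wait are all hypothetical, and the one-way delay is approximated as half of the observed round-trip time.

```python
from collections import OrderedDict
from typing import Optional


class SendCache:
    """Keeps the most recently sent packets so they can be retransmitted."""

    def __init__(self, capacity: int = 512) -> None:
        self._capacity = capacity
        self._packets: "OrderedDict[int, bytes]" = OrderedDict()

    def store(self, seq: int, payload: bytes) -> None:
        self._packets[seq] = payload
        if len(self._packets) > self._capacity:
            self._packets.popitem(last=False)  # evict the oldest packet

    def get(self, seq: int) -> Optional[bytes]:
        return self._packets.get(seq)


def handle_nack(cache: SendCache, seq: int,
                rtt_ms: float, time_left_ms: float) -> Optional[bytes]:
    """Return the payload to retransmit, or None if retransmitting is pointless."""
    payload = cache.get(seq)
    if payload is None:
        return None  # packet already evicted from the send cache
    one_way_ms = rtt_ms / 2  # assumption: symmetric path
    if one_way_ms > time_left_ms:
        return None  # would arrive after the receiver needs it
    return payload
```

With a 50 ms round-trip time and 100 ms left before playout, the packet is retransmitted; at a 400 ms round-trip time the same request is dropped, which is the situation where the other mechanisms in this post take over.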

Full Intra Requests (FIR)

When video is sent in a WebRTC session it always starts with an I-frame, and thereafter P-frames are sent. However, when a new participant joins a conference mid-session, it is quite likely that it receives a series of P-frames that it cannot decode without the corresponding I-frame. In this case, the receiving endpoint will request an I-frame by sending a Full Intra Request.

Consequently, in large conferencing platforms with hundreds of participants who join or re-join the conference at short intervals, each participant requests an I-frame to begin decoding. Depending on the frequency of rejoins, this can cause the sender to generate a lot of I-frames.

Picture Loss Indication (PLI)

The Picture Loss Indication message indicates that a burst loss affected multiple packets belonging to one or more frames. The sender may respond by retransmitting packets or by generating a new I-frame. More generally speaking, a PLI can behave both as a NACK and as a FIR. By using a PLI, the receiver therefore offers the sender the flexibility to react to the request in different ways.

Slice Loss Indication (SLI)

The Slice Loss Indication message indicates that the packet loss affects parts of a single frame (i.e., multiple macroblocks). Hence, when a sender receives an SLI message, it can rectify the slices by re-encoding them, and stop the propagation of partial frame decoding errors.

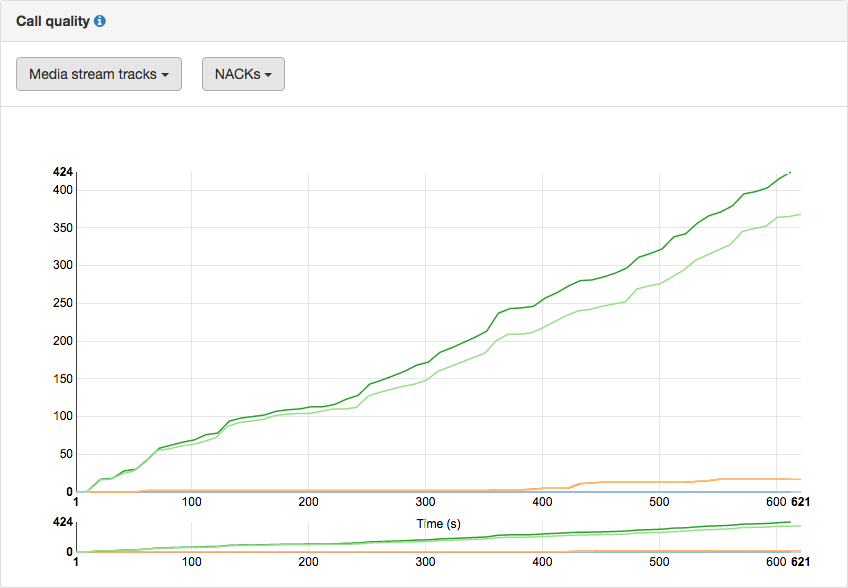

New Graphs on the Dashboard

To help our customers observe how well their applications send and receive real-time video, we have added graphs to show the following:

- The frequency of NACKs received by the sender for all media tracks.

- The frequency of FIR/PLI/SLI requests received by the sender for the video tracks.

Some of these metrics are currently only available if the reporting endpoint is the Chrome browser.
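A frequency like the ones plotted in these graphs can be derived from cumulative counters sampled at two points in time. The sketch below is only an illustration of that arithmetic, not a description of how the dashboard computes its graphs. The key names `nackCount`, `firCount` and `pliCount` follow the counter names used in WebRTC statistics, while the snapshot dictionaries and the `feedback_rates` helper are assumptions made for this example; timestamps are taken to be in milliseconds.

```python
from typing import Dict

COUNTERS = ("nackCount", "firCount", "pliCount")


def feedback_rates(previous: Dict[str, float],
                   current: Dict[str, float]) -> Dict[str, float]:
    """Convert two cumulative-counter snapshots into events per second."""
    elapsed_s = (current["timestamp"] - previous["timestamp"]) / 1000.0
    if elapsed_s <= 0:
        raise ValueError("snapshots must be in chronological order")
    return {name: (current.get(name, 0) - previous.get(name, 0)) / elapsed_s
            for name in COUNTERS}


# Two hypothetical snapshots taken two seconds apart.
earlier = {"timestamp": 1000, "nackCount": 4, "firCount": 0, "pliCount": 1}
later = {"timestamp": 3000, "nackCount": 10, "firCount": 0, "pliCount": 2}
rates = feedback_rates(earlier, later)
```

Here the sender received six NACKs and one PLI in two seconds, so `rates` holds 3.0 NACKs per second, 0.5 PLIs per second, and no FIRs.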

A graph of NACKs counted in a WebRTC conference shown in the callstats.io dashboard
