The WebRTC specification and the industry as a whole are evolving. The specification has not yet reached version 1.0, but the number of companies building on it is growing quickly. In April 2016, about 26 new WebRTC companies were appearing every month, and the current count is most likely over 1,000.

We talk to many companies that build products with WebRTC, and thought it would be useful to share the various divisions of workload and team responsibilities that we see in the field for WebRTC monitoring. Continue below to see how the monitoring workload is divided between customer support, DevOps, and engineering.

Customer support

The customer support process involves:

- Gathering end-user feedback through various channels

- Understanding the exact problem that the customer is facing

- Providing the end-user with a possible workaround or solution, or pointing them to the help center

- Sharing the insight with the team internally, with the intention of providing more robust solutions to customers

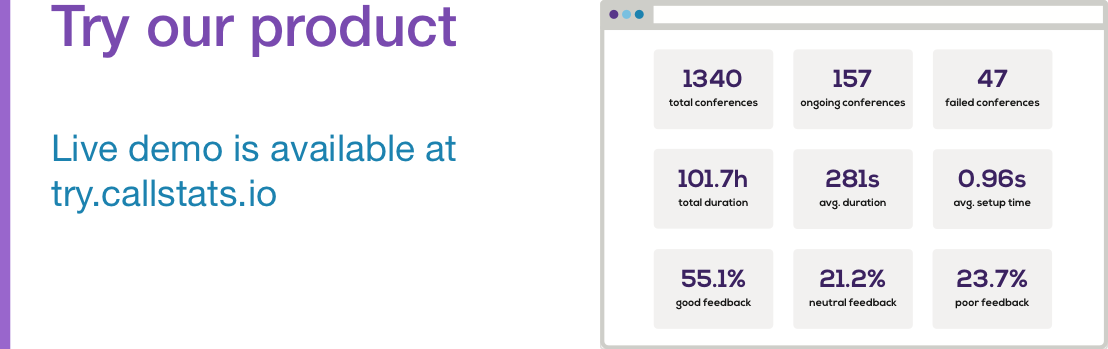

Customer support teams come in various sizes and setups. In smaller startups, the founders do most of the customer support; in a company of over ten people, there might be a dedicated support person; and in bigger companies, there is probably a dedicated team. If the company does not use any monitoring tools, then customer support is the only way to monitor what is happening while keeping customers happy.

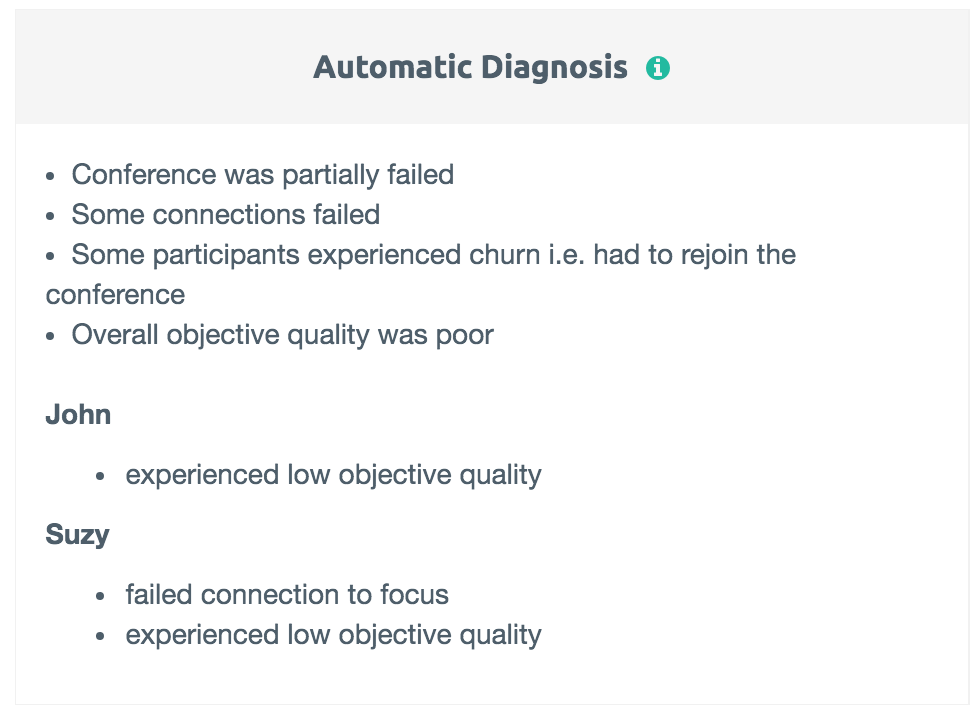

The callstats.io dashboard shows a plain-English diagnosis per user to help debug their problems.

Awesome customer support can be a huge asset for a company: it keeps customers happy, and it can bring in new ones. However, customer support is mostly about looking into the rearview mirror, and quality assurance should not be left solely to users and customer support. Issues reported by customers need to flow to engineering, but the development team needs to carry out its own monitoring as well.

DevOps

The goal of DevOps is to ensure that building, testing, and deploying software can happen rapidly, frequently, and reliably. Unlike customer support, DevOps operates proactively, trying to prevent issues rather than fixing them reactively as they appear. To accomplish their goals, DevOps engineers follow a process [1]:

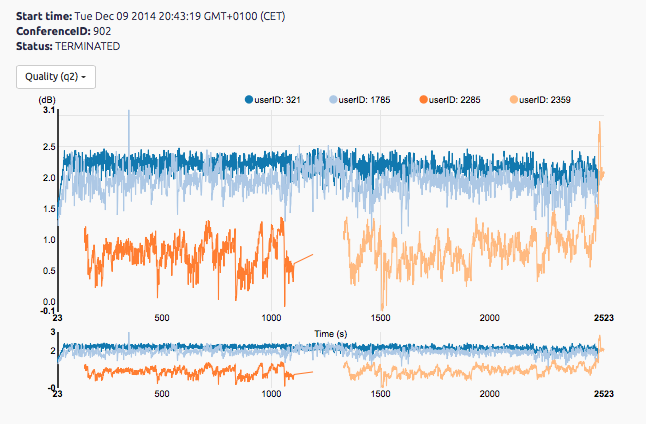

- Measure metrics over a time period

- Visualise those metrics on a dashboard

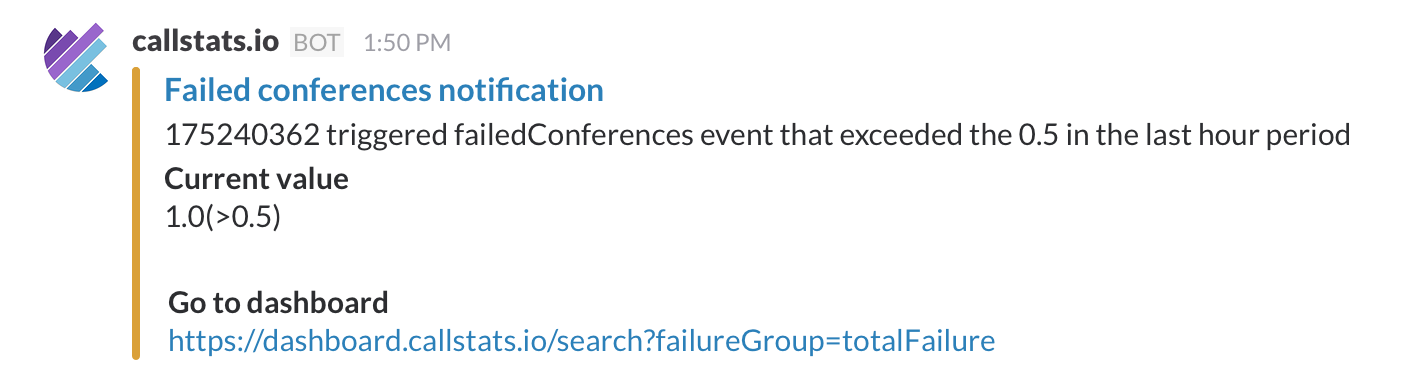

- Trigger alarms, which the DevOps engineers then respond to
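The measure-and-alarm steps above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `FailureRateMonitor` class, its five-minute window, and its 5% threshold are hypothetical and are not taken from callstats.io or any other monitoring product. It also assumes that conference outcomes arrive in timestamp order.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Tuple


@dataclass
class FailureRateMonitor:
    """Hypothetical monitor: conference failure rate over a sliding time window."""

    window_seconds: float = 300.0  # assumed window: the last five minutes
    threshold: float = 0.05        # assumed alarm level: more than 5% failures
    _events: Deque[Tuple[float, bool]] = field(default_factory=deque)

    def record(self, timestamp: float, failed: bool) -> None:
        """Store one conference outcome (timestamps must be non-decreasing)."""
        self._events.append((timestamp, failed))
        self._prune(timestamp)

    def _prune(self, now: float) -> None:
        """Drop outcomes that have fallen out of the time window."""
        while self._events and self._events[0][0] <= now - self.window_seconds:
            self._events.popleft()

    def failure_rate(self, now: float) -> float:
        """Fraction of conferences in the window that failed (0.0 if none)."""
        self._prune(now)
        if not self._events:
            return 0.0
        failures = sum(1 for _, failed in self._events if failed)
        return failures / len(self._events)

    def should_alarm(self, now: float) -> bool:
        """True when the windowed failure rate exceeds the threshold."""
        return self.failure_rate(now) > self.threshold
```

In practice, the value returned by `failure_rate` would feed a dashboard, and `should_alarm` would decide whether to send a notification to whoever is on call.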

One part of the work is to monitor metrics of the deployed software and make sure that it functions and performs as intended, and at least as well as previous versions. For example, DevOps might check that the conference failure rate does not increase when a new version is deployed.

A notification sent from callstats.io to Slack.

Conversely, if a fix for conference failures is deployed, DevOps would measure the resulting improvement in the failure rate. Of course, failure is just one example; the same approach can be applied to churn, objective and subjective quality, or conference duration. In essence, it means measuring any conference metric whose degradation could stunt the growth of the application or web service.
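The before-and-after comparison between versions could look roughly like the following Python sketch. The `VersionStats` type, the `compare_failure_rates` function, and the one-percentage-point tolerance are all hypothetical names and values chosen for illustration. A real deployment would probably also check whether the difference is statistically significant, which this sketch does not do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VersionStats:
    """Hypothetical per-version summary of conference outcomes."""

    version: str
    conferences: int
    failures: int

    @property
    def failure_rate(self) -> float:
        # Guard against division by zero for a version with no traffic yet.
        return self.failures / self.conferences if self.conferences else 0.0


def compare_failure_rates(
    previous: VersionStats,
    current: VersionStats,
    tolerance: float = 0.01,  # assumed: ignore changes within one percentage point
) -> str:
    """Classify the current version as 'regressed', 'improved', or 'unchanged'."""
    delta = current.failure_rate - previous.failure_rate
    if delta > tolerance:
        return "regressed"
    if delta < -tolerance:
        return "improved"
    return "unchanged"


if __name__ == "__main__":
    before = VersionStats("1.4.0", conferences=1000, failures=50)
    after = VersionStats("1.5.0", conferences=1000, failures=80)
    print(compare_failure_rates(before, after))
```

The same comparison works for any other metric mentioned here, such as churn or conference duration, by swapping the property that is compared.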

Engineering

If customer support is reactive and DevOps proactive, then engineering’s approach is to be active, as engineers strive to ensure that each deployed software version has fewer issues than the one before it. However, they rely on the observations and issues reported by customer support and DevOps.

We believe that, like DevOps, engineering should proactively monitor deployments and look for specific issues related to the features released in the latest deployment, making sure that those features are working as intended.

WebRTC internals on steroids.

Summary

Whilst the WebRTC specification is getting close to v1.0, there is already a growing number of WebRTC deployments. The testing and monitoring practices for WebRTC products are still taking shape. As WebRTC becomes more mature, we think that the workload will split more evenly between operations and engineering.

Regardless of the complexity of the WebRTC product, it is best to use some sort of monitoring tool and not rely only on customer comments. Figuring out what is causing a problem is a lot easier when a monitoring tool gives you metrics that can either confirm or refute the customer’s claim, taking you one step closer to solving the reported issue.

Furthermore, if your monitoring tool collects and analyses data in real time, you can debug your client’s call while the call is still ongoing. At one company, customer support answers calls made to traditional phone numbers, and from time to time they are on the phone debugging a live WebRTC-powered call that is underperforming.

If you have stories or best practices to share regarding WebRTC monitoring and testing, we would love to hear from you at support[a]callstats.io or in the comments below.

[1] In some companies there is a distinction between Site Reliability Engineering (SRE) and DevOps, and this sort of process would fall more on SRE than DevOps in such a company.