Latency is now the most important metric on the Internet because applications are becoming more interactive. The Internet community is therefore continuously working on transport protocols to reduce it. For example, in 2009, as part of its “Make the Web faster” initiative, Google announced SPDY, an experimental protocol that evolved HTTP. The main objectives set for the protocol were to cut page load times by 50% and to reduce the deployment complexity of switching to a new application-layer protocol.

SPDY offers four main features:

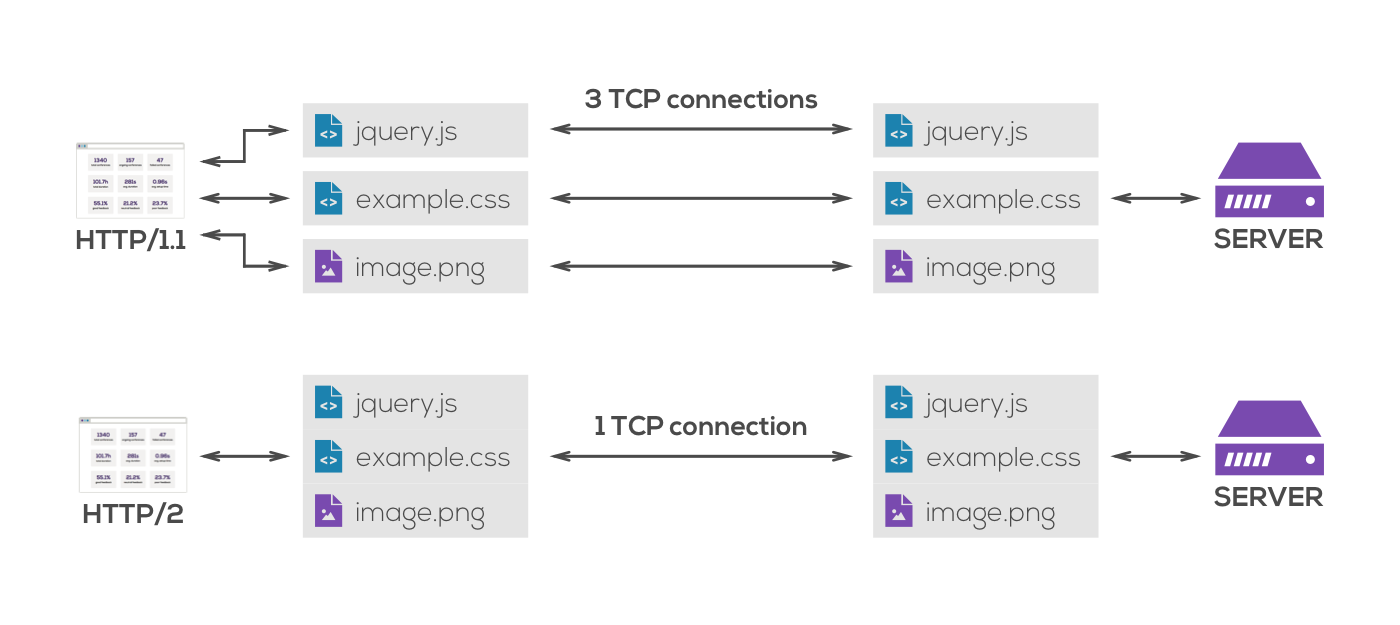

- Multiplexing: SPDY opens a single TCP connection per sub-domain (or at least fewer connections than HTTP/1.1) and multiplexes the data streams over it. This avoids head-of-line (HOL) blocking at the resource level, saves the time needed to open new TCP connections, and reduces the impact of TCP “slow start”.

- Header compression: Compressing headers avoids sending redundant header information back and forth and significantly reduces the size of request and response headers.

- Request prioritization: High-priority resources are requested before other resources, which allows web pages to render faster in bandwidth-limited scenarios.

- Server Push and Server Hint: With server push, some resources are pushed to the client before the client requests them. This can vastly enhance the user experience because essential resources arrive before the client asks for them. With server hint, the server marks some resources with a priority, and the client can choose whether to request them based on its bandwidth limitations.

After SPDY showed significant improvements over HTTP/1.1, the IETF’s HTTP working group took on the task of standardizing HTTP/2 (H2) to improve the protocol.

HTTP/1.1 and HTTP/2 connections

HTTP/2 is backward compatible with HTTP/1.1, i.e., if H2 cannot be negotiated, the connection falls back to HTTP/1.1. Apart from backward compatibility, H2 aims to use network resources more efficiently by introducing header field compression (which reduces on-the-wire latency) and by allowing multiple concurrent exchanges on the same connection.

Furthermore, H2 server push can be used for generic event delivery, which is subscription based: the user agent subscribes to different services, and the application server pushes messages to subscribed user agents rather than sending them unsolicited messages.

| SPDY | HTTP/2 | Advantages |
|---|---|---|
| Multiplexing | Multiplexing | No HOL blocking |
| Header compression | Header compression | Reduced packet size |
| Request prioritization | Request prioritization | Faster rendering |
| Server push and Server Hint | Server push | Notification |
| – | Binary frames | Faster parsing |

SPDY vs. HTTP/2

The main difference between HTTP/2 and SPDY lies in their header compression algorithms. HTTP/2 uses the HPACK algorithm, which significantly reduces GET request message sizes compared to SPDY, which uses DEFLATE.

Both HTTP/2 and SPDY use TCP as the transport layer protocol. TCP’s “slow start” forces applications to open multiple TCP connections to achieve parallelism and higher performance. For example, TCP’s initial congestion window (IW) determines how much data can be sent in the initial phase of a TCP transaction. If the IW value is small, TCP needs multiple round-trip times (RTTs) to reach its maximum throughput.

Evolution towards using UDP instead of TCP

Over the last few years, the ossification of the protocol stack has been discussed several times: HTTP has become the waist of the Internet, and HTTP/TCP/IP is considered the default stack. For some, the ossified stack hampers the evolution of the web and the Internet because applications are more or less constrained by the available features. This has led several organizations to experiment with and develop new protocols over UDP. The wide belief within the community now is that moving to UDP will generally accelerate the development and evolution of transport protocols, because userspace implementations of a new protocol aid rapid deployment.

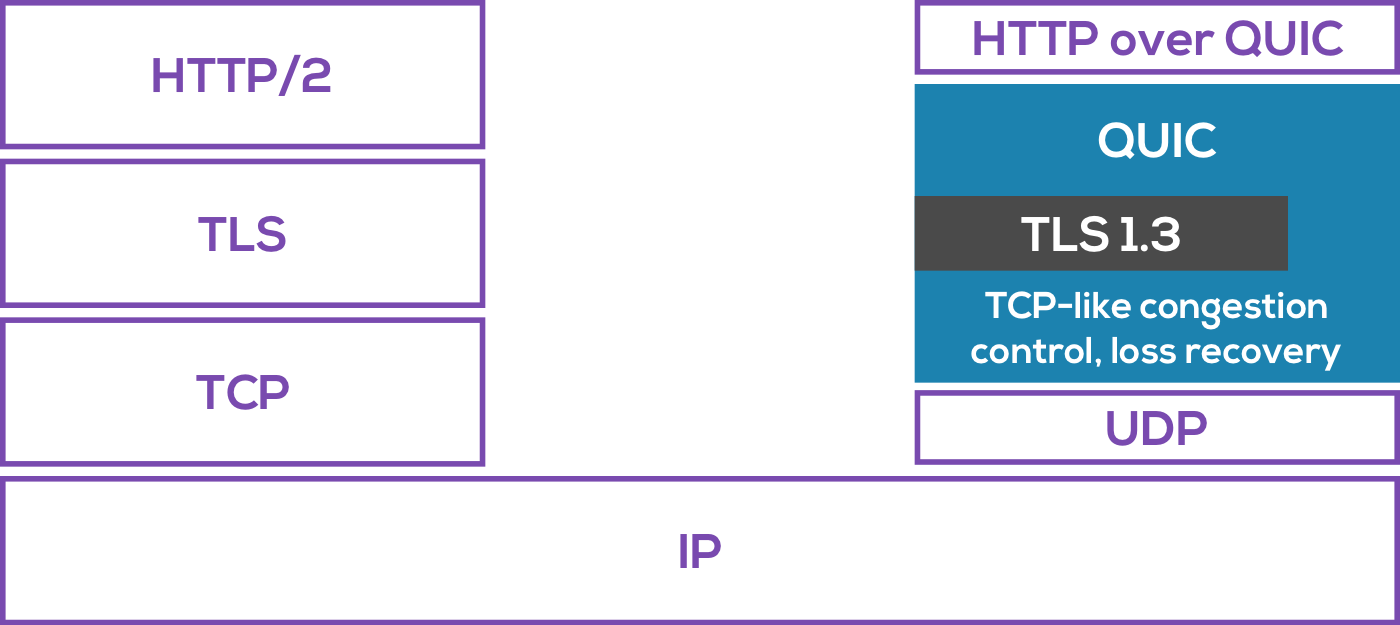

Evolvability in the short term should lead to lower connection establishment times and better flow control (at the stream and connection level) and, in general, let new protocols leverage the best practices learnt and developed over the last two decades of experimentation on TCP. QUIC (Quick UDP Internet Connections) is proposed as a new transport for HTTP/2, built on top of UDP. Jana Iyengar’s slide deck from IETF 96 in Berlin highlights the design aspirations and gains of QUIC.

Current proposal of QUIC at the IETF, from Jana Iyengar’s presentation

Initial results from Google, Akamai, and Microsoft are promising. According to Google, 93% of connections were set up with QUIC without falling back. The same report shows a 5% reduction in page load times and a 30% reduction in re-buffering events on YouTube. QUIC was recently chartered as an IETF working group, and work is underway to specify it.

HTTP/2 at callstats.io

Our new data collection REST API uses HTTP/2 for transport. We started transitioning away from WebSockets last summer, and our initial experiments were promising. The new data collectors are written in Go and use its default H2 server. However, one remaining issue requires a bit of monkey patching and has delayed the roll-out: at the time of writing this blog post, WriteTimeout is disabled, but this will be fixed in Go v1.9. Apart from this, we fine-tuned our security settings based on this blog post. Now that Go v1.8 has been released, we are all set to go.

If you are a golang enthusiast, apply to join our server engineering team; we are looking to hire 2-3 more developers.