Latency is currently the most important metric on the Internet. At the user level, latency is a monumental annoyance. As we have mentioned before, the Internet community is continuously working on transport protocols that reduce latency. QUIC is among the most promising of these protocols.

Short for Quick UDP Internet Connections, QUIC was initially developed by Google as an alternative transport protocol to shorten the time it takes to set up a connection. Google wanted to carry the benefits of its work on SPDY, another Google-developed protocol that became the basis for the HTTP/2 standard, into a transport protocol with faster connection setup and built-in security. HTTP/2 over TCP multiplexes and pipelines requests over one connection, but a single lost packet and its retransmission cause head-of-line blocking (HOLB) for all the resources that were being downloaded in parallel. QUIC overcomes this shortcoming of multiplexed streams by removing HOLB between streams. QUIC was created with HTTP/2 as its primary application protocol and is optimized for HTTP/2 semantics.

What makes QUIC interesting is that it is built on top of UDP rather than TCP. As a result, getting a secure connection running takes less time with QUIC, and packet loss in one stream does not affect the other streams on the connection. Multiple objects can therefore be retrieved in parallel, even when packets are lost on a different stream. QUIC is also implemented in user space, whereas TCP is implemented in the kernel. This gives developers the flexibility to improve congestion control over time, because a user-space stack can be tuned and replaced far more easily than a kernel can be upgraded (for example, apps and browsers are updated more often than operating systems).

QUIC vs TCP

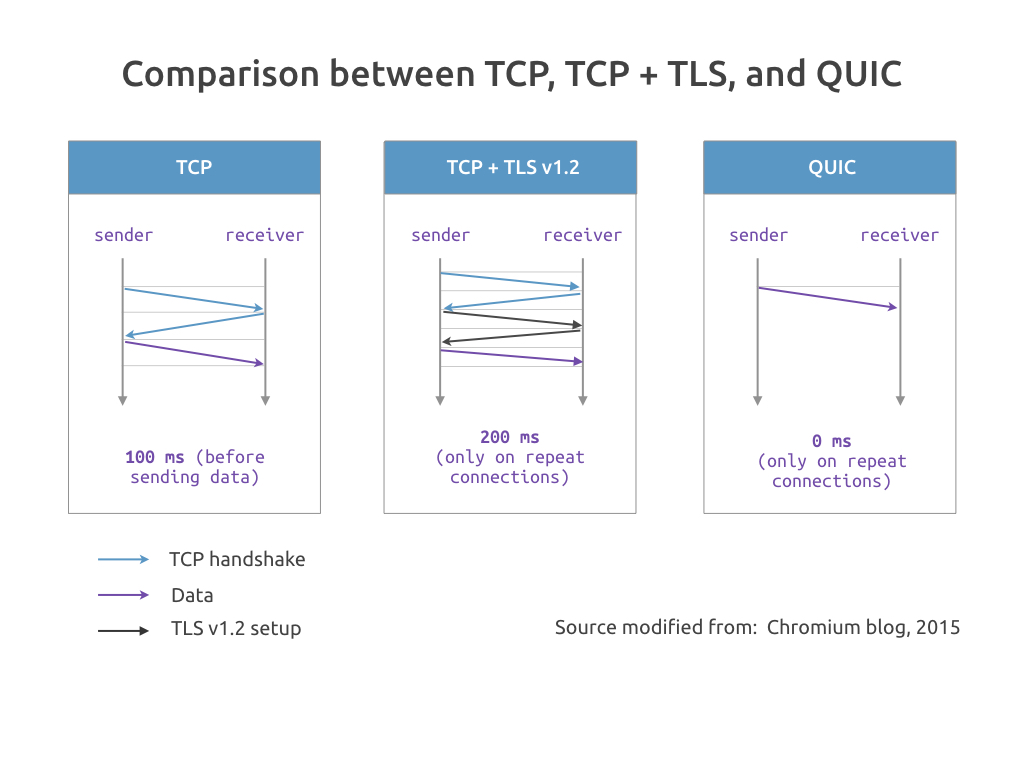

The Internet was built over TCP largely because it is a reliable transmission protocol. But where TCP shines in reliability, it dims in the number of round trips required to establish a secure connection. A secure connection is needed before a browser can request a web page. Secure web browsing usually involves communicating over TCP, plus negotiating TLS to create an encrypted HTTPS connection. This approach generally requires at least two to three round trips (packets being sent back and forth) with the server to establish a secure connection. Each round trip adds latency to every new connection. Web browsers also establish many parallel connections, even to the same server. Web pages easily comprise a hundred or more objects, and loading these in parallel without HOLB and within one security context reduces the page load time.

When packets are sent over UDP, we hope that they will make it to their destination, but the remote endpoint does not send an acknowledgement on receiving a packet. Acknowledgement has to be handled by the application according to its needs (for example, whether it needs all the packets or can tolerate some packet loss). This is one of the properties that motivated the design of QUIC: to reduce the number of round trips needed to establish and maintain a connection between communicating endpoints. With QUIC built on top of UDP, a browser can start a connection and immediately begin sending data without waiting for any acknowledgement from the remote side (0-RTT setup), which saves the round trips otherwise needed. This is only possible if the endpoint is talking to a known server. The reduction in round trips makes web pages load significantly faster. If, however, the communication is with an unknown server, both TCP and QUIC take about the same amount of time to establish a connection. For more details, read the following blog posts on QUIC: https://www.theregister.co.uk/2015/04/17/google_quic_test_results/, https://www.slashgear.com/googles-quic-protocol-talks-udp-for-a-faster-internet-20379814/
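
To make the cost of round trips concrete, here is a minimal back-of-the-envelope sketch. The round-trip counts come from the text above (two to three for TCP plus TLS, zero for QUIC talking to a known server). The 100 ms round-trip time and the function name `setup_delay_ms` are assumptions chosen purely for illustration.

```python
def setup_delay_ms(round_trips: int, rtt_ms: float) -> float:
    """Time spent on handshakes before the first request can be sent."""
    return round_trips * rtt_ms


RTT_MS = 100.0  # hypothetical round-trip time to the server

# Round-trip counts as described in the article.
scenarios = {
    "TCP + TLS (2 round trips)": 2,
    "TCP + TLS (3 round trips)": 3,
    "QUIC 0-RTT to a known server": 0,
}

delays = {name: setup_delay_ms(n, RTT_MS) for name, n in scenarios.items()}

for name, delay in delays.items():
    print(f"{name}: {delay:.0f} ms before the first request")
```

Under these assumed numbers, every new TCP plus TLS connection spends 200 to 300 ms on handshakes alone, while a 0-RTT QUIC connection can send its first request right away.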

Fewer Packets, Lower Latency

QUIC also essentially eliminates head-of-line blocking. As the name suggests, head-of-line blocking happens when the delay in receiving a single packet holds up the entire line of packets behind it. In TCP, head-of-line blocking can be compounded because the order in which packets are processed matters. If a packet is lost on its way to the server, for example, it has to be retransmitted. The TCP connection must wait on the recovered packet before it can continue processing any other packets.

In contrast, UDP is not dependent on the order in which packets are received. QUIC exploits this property and layers flexible stream multiplexing on top, in which only the contents of each individual stream are ordered. If a packet of a stream is lost during transit, only one resource (a file transmitted over that stream, for example) is paused; the entire connection is not blocked.
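
The difference can be sketched with a toy simulation. This is not how TCP or QUIC is implemented. It is a simplified model with made-up tick values, in which six packets from three streams are sent in turn, the first packet is lost, and its retransmission arrives late. The function `stream_completion` and the data are hypothetical.

```python
from typing import Dict, List


def stream_completion(streams: List[str], arrival: List[int],
                      per_stream_ordering: bool) -> Dict[str, int]:
    """Return the tick at which each stream is fully handed to the application.

    streams[i] is the stream that packet i belongs to (i is the send order).
    arrival[i] is the tick at which packet i finally arrives.
    """
    done: Dict[str, int] = {}
    for i, stream in enumerate(streams):
        # Packets that must have arrived before packet i can be delivered:
        # every earlier packet (single ordered byte stream), or only the
        # earlier packets of the same stream (per-stream ordering).
        blockers = [j for j in range(i + 1)
                    if not per_stream_ordering or streams[j] == stream]
        delivered_at = max(arrival[j] for j in blockers)
        done[stream] = max(done.get(stream, 0), delivered_at)
    return done


streams = ["A", "B", "C", "A", "B", "C"]
# Packet 0 (stream A) is lost; its retransmission arrives at tick 10.
arrival = [10, 1, 2, 3, 4, 5]

tcp_like = stream_completion(streams, arrival, per_stream_ordering=False)
quic_like = stream_completion(streams, arrival, per_stream_ordering=True)

print("single ordered stream:", tcp_like)   # {'A': 10, 'B': 10, 'C': 10}
print("per-stream ordering:  ", quic_like)  # {'A': 10, 'B': 4, 'C': 5}
```

In the single ordered stream, the one lost packet delays all three resources until tick 10. With per-stream ordering, only stream A waits for the retransmission.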

As noted in our blog post dated February 17, initial results of QUIC deployments by Google, Akamai, and Microsoft are promising. QUIC is enabled by default in the Chrome browser, and according to Google, about half of all requests from Chrome to Google web servers happen over QUIC. Google-owned websites such as Google Search and YouTube also support QUIC traffic. Google has measured a 3% improvement in mean page load time with Google Search. Additionally, Google reported that the gains are especially apparent on YouTube, where users experienced 30% fewer rebuffering events (video pauses) when consuming content over QUIC.

Space to Innovate

As we know, applications are becoming increasingly interactive and mobile, which means that the performance benefits of a protocol such as QUIC simply cannot be ignored. Shifting to QUIC means that end users will enjoy faster page loads and less buffering in video.

QUIC brings many benefits to the Internet community at large. Within the community, there is considerable consensus that moving to UDP will accelerate the evolution of transport protocols, because the user-space implementation of the new protocol promotes rapid deployment and flexibility, leading to increased innovation. Examples of techniques being tested in QUIC include bandwidth estimation and packet pacing to reduce congestion, and forward error correction (FEC) to cut a large portion of retransmission-induced latency. These and further techniques are expected to evolve over time, making user-space stacks essential for smooth adoption.
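
As an illustration of how FEC can avoid a retransmission, the sketch below uses a simple XOR parity packet over a group of equal-length packets. The text above does not specify which FEC scheme is used, so XOR parity is an assumption here, and the helper names `make_parity` and `recover_missing` are hypothetical.

```python
from functools import reduce
from typing import List


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def make_parity(packets: List[bytes]) -> bytes:
    """Sender side: one parity packet protecting a group of packets."""
    return reduce(xor_bytes, packets)


def recover_missing(received: List[bytes], parity: bytes) -> bytes:
    """Receiver side: rebuild the single missing packet of the group.

    XOR-ing the parity with every packet that did arrive cancels them out
    and leaves the one packet that was lost.
    """
    return reduce(xor_bytes, received, parity)


# Assumed example data: three equal-length packets in one FEC group.
group = [b"AAAA", b"BBBB", b"CCCC"]
parity = make_parity(group)

# Packet 1 is lost in transit; the other two and the parity arrive.
recovered = recover_missing([group[0], group[2]], parity)
print(recovered)  # b'BBBB'
```

The receiver rebuilds the lost packet locally instead of waiting a round trip for a retransmission. The cost is one extra packet per group, and this simple scheme can repair only one loss per group.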

TCP is implemented in the OS kernel, and users do not update their OS kernel very frequently. This limits how often changes or updates can be made to the TCP stack on an end user's endpoint, so evolutionary changes in TCP take years. In contrast, QUIC is implemented in user space, for example in browsers, which update themselves every 6 weeks. QUIC can therefore evolve in weeks or months, and developers can freely test and experiment with new and innovative ideas.

H2 and QUIC at callstats.io

Our REST API, which is currently in beta, is built atop HTTP/2, and we are excited to use QUIC as soon as it is stable and available for wide use.

Are you interested in developing and optimizing network algorithms? Join us!