First came virtualization: emulated computer systems that let us run guest operating systems within a host operating system. In the last half-decade, another trend, with Docker at the forefront, has swept through software development circles, and containers and container orchestration are now the watchwords.

Container orchestrators like Kubernetes are used to move software projects safely and swiftly from one environment to another. By containerizing (isolating) the software and its dependencies, operating system and infrastructure differences are factored out of the deployment and production process.

The recent HN debate on how overcomplicated container orchestration has become sparked an active discussion in our devops team about how and why we manage our deployments with Kubernetes.

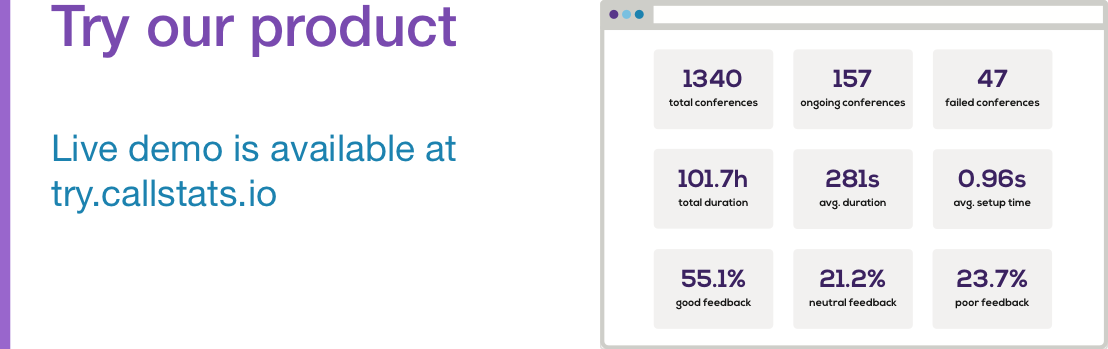

Unsurprisingly, given its popularity, callstats.io also uses Kubernetes to manage our clusters of containers. In this post, our devops engineer Eljas Alakulppi reflects on his experiences running our Kubernetes setup.

Kubernetes at callstats.io

Our brief history of deployment: like many in those days, we started with completely manual deployments, followed by a script whose runtime increased by 10 minutes for each new instance. We finally moved to our current Kubernetes model in fall 2016, and now run up to 90% of our apps on Kubernetes.

The main reason we use Kubernetes at callstats.io is simple: It lets us minimize the number of servers we run in our AWS ecosystem, naturally bringing about sizeable cost efficiencies. But we also make use of many features available in Kubernetes.

Our Kubernetes setup & developer-led deployments

Here’s how our devops team highlights the main benefits of our Kubernetes setup:

- The built-in service registration and discovery provided by Kubernetes is a breeze compared with other solutions such as etcd or ZooKeeper.

- The ease of scaling services up and down with Kubernetes is something the team especially appreciates.

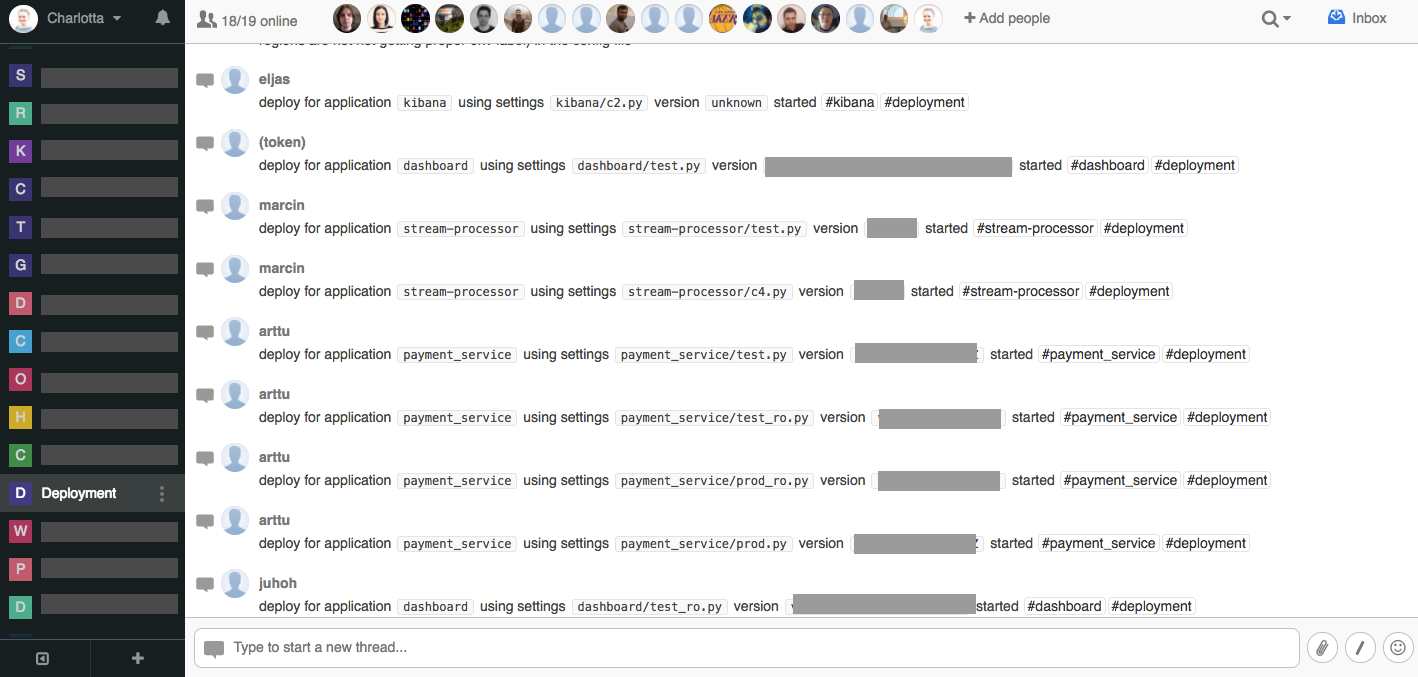

- Easy upgrades and rollbacks: Kubernetes provides nice mechanisms to manage the rolling out of service updates. We’ve got custom deployment scripts to create deployments with versioned configurations. This allows for relatively easy rollbacks when needed. We also appreciate how Kubernetes allows running separate jobs during deployment, for example for a database migration. In addition, part of the deployment script notifies our Flowdock flow so that the whole team can follow deployments.

- Developer-led rollouts: Creating a completely new deployment (a YAML template plus a Python script to supply environment-specific data) is relatively simple and does not always require involvement from the devops side. At callstats.io, actual new version rollouts are regularly managed by our developers.

- We use either the nginx ingress controller or a straight LoadBalancer (with proxy protocol enabled) to expose services to the outside world.

- Secrets for accessing HashiCorp Vault are created during the deployment for services that support it. There are some well-known downsides to this setup, so we’re currently planning to switch to Kubernetes-based authentication.

Currently, initial Vault provisioning for new applications is done by our devops team, but going forward, the plan is to allow developers to configure most apps by themselves.
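To make the developer-led rollout flow more concrete (a YAML template plus a Python script that supplies environment-specific data, with versioned configurations to ease rollbacks), here is a minimal sketch of what such a script could look like. It is an illustration only, not the actual callstats.io tooling: the template contents, the `ENVIRONMENTS` table, the `registry.example.com` registry, the `stats-api` app name and the ConfigMap naming scheme are all hypothetical.

```python
from string import Template

# Hypothetical Deployment template; the $-placeholders are filled in per environment.
DEPLOYMENT_TEMPLATE = Template("""\
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $app
  labels:
    app: $app
    version: "$version"
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $app
  template:
    metadata:
      labels:
        app: $app
        version: "$version"
    spec:
      containers:
        - name: $app
          image: $registry/$app:$version
          envFrom:
            - configMapRef:
                name: $config_name
""")

# Hypothetical environment-specific data (assumed values, for illustration only).
ENVIRONMENTS = {
    "staging": {"replicas": 1, "registry": "registry.example.com/staging"},
    "production": {"replicas": 3, "registry": "registry.example.com/prod"},
}


def versioned_config_name(app, version):
    """Build a ConfigMap name tied to one release, so older releases keep their own config."""
    return f"{app}-config-{version.replace('.', '-')}"


def render_deployment(app, version, environment):
    """Render the Deployment manifest for one app version in one environment."""
    env = ENVIRONMENTS[environment]  # raises KeyError for an unknown environment
    return DEPLOYMENT_TEMPLATE.substitute(
        app=app,
        version=version,
        replicas=env["replicas"],
        registry=env["registry"],
        config_name=versioned_config_name(app, version),
    )


if __name__ == "__main__":
    print(render_deployment("stats-api", "1.4.2", "staging"))
```

The rendered manifest can be piped to `kubectl apply -f -`. Because each release references its own versioned ConfigMap, rolling back in this sketch amounts to re-rendering and applying the previous version.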

Like most companies using Kubernetes, we don’t deploy Kubernetes directly using the service’s own provisioning tools. We briefly considered rolling out our own provisioning tool, but ended up opting for kops instead. Kops contained more or less everything that we wanted at that point, and what’s more, in the last 1.5 years, kops has added many new features that we’ve taken up as well. Like all software, kops is not perfect, though: its releases seem to lag one version behind, for example. However, for our use, its convenience and its fast and easy start-up far outweigh the negatives.

Network configuration

Back in 2016, when we deployed our initial Kubernetes cluster, we had a few different options for inter-node communication. Out of these, we ended up choosing Calico, as we thought a BGP-based network overlay would, in the future, allow us to more easily connect Kubernetes “natively” with AWS.

This, however, proved to be quite a bit more challenging than we had anticipated: injecting one’s own routes in AWS isn’t exactly trivial. Furthermore, there is currently no direct connectivity from our VPC to Kubernetes pods, though Kubernetes pods can access services within our VPC.

Lately, we have been following more native methods to get network connectivity to pods, such as aws-vpc-cni-k8s, although we see some limitations that could be troublesome on smaller instance sizes.
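One commonly cited constraint of this approach is that each pod receives a VPC IP address from an elastic network interface (ENI), so the number of pods per node is capped by the instance type’s ENI and per-ENI address limits. Whether this is the exact limitation meant above is an assumption. The sketch below uses the widely published formula, ENIs × (IPv4 addresses per ENI − 1) + 2; the per-instance numbers in `INSTANCE_LIMITS` are illustrative values and should be checked against current AWS documentation.

```python
def max_pods(enis, ipv4_per_eni):
    """Approximate pod capacity of a node when every pod gets a VPC IP from an ENI.

    One address per ENI is reserved for the interface itself; the +2 accounts
    for pods that run on the host network.
    """
    return enis * (ipv4_per_eni - 1) + 2


# (max ENIs, IPv4 addresses per ENI): illustrative values, verify before relying on them.
INSTANCE_LIMITS = {
    "t2.small": (3, 4),
    "t2.medium": (3, 6),
    "m5.large": (3, 10),
}

if __name__ == "__main__":
    for instance_type, (enis, ips) in INSTANCE_LIMITS.items():
        print(f"{instance_type}: up to {max_pods(enis, ips)} pods")
```

With these assumed limits, a small instance tops out at roughly a dozen pods, which can be reached quickly once system daemons and sidecars are counted.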

If we had one wish, it would be for managing several Kubernetes clusters to be more fault tolerant. Even with Federation, we still need a master cluster, and here we’d like Kubernetes to improve its failure-proofing.

Got similar experiences with Kubernetes? Any advice for our team’s orchestration efforts? How would you set up your container orchestration? Talk to Eljas and the rest of our devops team: devops(at)callstats.io