Quality of Experience (QoE) has somehow eluded a proper definition for many years. The current consensus of the scientific community is that QoE is:

the degree of delight or annoyance of the user of an application or service.

Furthermore:

It results from the fulfillment of his or her expectations with respect to the utility and/or enjoyment of the application or service in the light of the user’s personality and current state.

This definition came from the Qualinet COST Action, in the form of a white paper co-authored by over 70 experts in the field. It is currently the working definition for the ITU-T as well.

Understanding what QoE is

Put simply, QoE concerns itself with how users experience the services they use. Traditionally, most QoE research has focused on media services (e.g., telephony, video), but more recently, other types of services have also caught the interest of the research community, such as web-based services, cloud services, gaming, etc.

QoE is a highly subjective concept, and as such, the only “truth” that can be gathered about it is the actual opinion of users. For a given service, we can collect ground truth by means of a subjective assessment, in which a panel of users rates the service under a series of carefully crafted conditions. For video, for example, the panel is split into raters who are trained to view and rate videos and raters who are not, with the majority being untrained. The methods to carry out these assessments have been well-studied, and many of them are standardized by the ITU-T (a canonical example is the P.800 recommendation for telephony systems). Running these assessments is tedious and expensive, and most of the research work on QoE revolves around ways to avoid them.
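To make the idea of ground truth more concrete, here is a minimal sketch of how the ratings from one test condition are commonly summarized as a mean opinion score (MOS) with a confidence interval. The function name, the 1 to 5 scale, the normal approximation, and the ratings themselves are assumptions made for illustration; they are not taken from a specific assessment.

```python
import math
import statistics


def mean_opinion_score(ratings, z=1.96):
    """Return (MOS, half-width of an approximate 95% confidence interval).

    Assumes ratings on a 1-5 absolute category rating scale and uses a
    normal approximation for the interval (a simplification).
    """
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on the 1-5 scale")
    mos = statistics.mean(ratings)
    # Sample standard deviation divided by sqrt(n) gives the standard error.
    half_width = z * statistics.stdev(ratings) / math.sqrt(len(ratings))
    return mos, half_width


# Hypothetical ratings from a panel for a single test condition.
ratings = [4, 5, 3, 4, 4, 2, 5, 4]
mos, ci = mean_opinion_score(ratings)
print(f"MOS = {mos:.2f} +/- {ci:.2f}")
```

With only a handful of raters the interval is wide, which is one reason why subjective assessments need sizeable panels and end up being expensive.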

Factors Influencing QoE

According to the ITU-T and the Qualinet white paper, factors influencing QoE:

Include the type and characteristics of the application or service, context of use, the user’s expectations with respect to the application or service and their fulfillment, the user’s cultural background, socio-economic issues, psychological profiles, emotional state, and other factors whose number will likely expand with further research.

In general, we can classify these factors into human factors, context factors, and system factors.

Human Factors

Human factors are inherent to the users of the service. They include the physiological, emotional, cultural, and socio-economic aspects of each user. In general, human factors are hard to capture, and their impact on QoE is equally hard to understand (though in some cases, human factors such as language have better-understood impacts on some aspects of QoE, such as listening quality for voice streams).

Context Factors

Context factors are related to the situational aspects of how the user actually uses the service. Context factors can further be classified into physical (e.g., location, mobility), temporal (e.g., frequency of use, time of the day, duration of use), economic (e.g., service price), and technical (e.g., type of device used, screen size).

System Factors

System factors are those characteristics of the service itself that can have an impact on the quality experienced by the users. In the case of WebRTC, examples include the network performance, the type of codec used, and the video resolution.

As you might have guessed, at callstats.io we are most interested in the system factors, which are amenable to monitoring and which contribute the largest overall component of the users’ QoE, that is, the perceived quality of a WebRTC service. That said, the other factor classes should not be ignored, as they can sometimes have a large impact on QoE.

Modeling QoE

Objective methods for assessing QoE aim to estimate the user ratings without asking users directly. They often rely on comparing the original media to the degraded media (full-reference methods), or on comparing some characteristics thereof (reduced-reference methods). These comparisons most often consider models of the human visual or auditory systems, as well as well-known aspects of perception such as the Weber-Fechner law. A third type of objective method is referred to as no-reference, and can be either signal-based (working only on the received media) or parametric (working on measured parameters).
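As a rough illustration of the full-reference idea, the sketch below computes the peak signal-to-noise ratio (PSNR) between an original and a degraded signal. PSNR is a deliberately simple stand-in here: it compares the two signals sample by sample and does not model human perception the way the methods mentioned above do. The function name and the sample values are made up for the example.

```python
import math


def psnr(reference, degraded, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized signals.

    A very simple full-reference metric: it needs both the original and
    the degraded samples, and it includes no perceptual model.
    """
    if len(reference) != len(degraded) or not reference:
        raise ValueError("signals must be non-empty and of equal length")
    mse = sum((r - d) ** 2 for r, d in zip(reference, degraded)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10 * math.log10(max_value ** 2 / mse)


# Hypothetical 8-bit luma samples before and after a lossy pipeline.
reference = [52, 55, 61, 66, 70, 61, 64, 73]
degraded = [54, 55, 60, 67, 69, 62, 64, 70]
print(f"PSNR = {psnr(reference, degraded):.1f} dB")
```

The need for the original signal is what makes this family of methods awkward for live monitoring, where the receiving side only ever sees the degraded media.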

In the context of monitoring applications, a no-reference method is the type of model we usually seek, as it allows us to estimate the perceived quality from measurable parameters. These parameters can come from the network, media, and application levels, and can include other contextual parameters that may be relevant (device type, location, etc.).
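A parametric no-reference model can be sketched in a few lines. The example below is loosely modeled on the ITU-T G.107 E-model for voice: the mapping from the rating factor R to a MOS estimate has the form given in that recommendation, while the delay and loss impairment terms follow a commonly used simplification for a G.711-like codec and should be treated as illustrative assumptions. This is not the model used by callstats.io, and the function names are hypothetical.

```python
import math


def r_to_mos(r):
    """Map an E-model rating factor R to an estimated MOS (G.107 form)."""
    if r <= 0:
        return 1.0
    if r >= 100:
        return 4.5
    return 1 + 0.035 * r + r * (r - 60) * (100 - r) * 7e-6


def estimate_r(one_way_delay_ms, loss_fraction):
    """Estimate R from two measurable parameters.

    The coefficients below are assumptions for illustration only.
    """
    d = one_way_delay_ms
    # Delay hurts mildly at first, then sharply once interaction suffers.
    delay_impairment = 0.024 * d + (0.11 * (d - 177.3) if d > 177.3 else 0.0)
    # Packet loss impairment grows logarithmically with the loss rate.
    loss_impairment = 30 * math.log(1 + 15 * loss_fraction)
    return 94.2 - delay_impairment - loss_impairment


good = r_to_mos(estimate_r(one_way_delay_ms=50, loss_fraction=0.0))
bad = r_to_mos(estimate_r(one_way_delay_ms=400, loss_fraction=0.05))
print(f"good network: MOS ~ {good:.2f}, bad network: MOS ~ {bad:.2f}")
```

Note that nothing in this sketch touches the media itself: the estimate is driven entirely by parameters that a monitoring system can measure.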

QoE and WebRTC

WebRTC presents unique challenges when it comes to understanding what QoE actually means in its context. The scientific literature offers a plethora of ways to understand, for example, voice quality, video quality, and conversational quality. To different degrees, these are “solved problems”, and they all clearly play a part in how WebRTC users experience the services they use.

The challenges come when we need to consider all of them at the same time, in a group of several people, who are possibly accessing the service with heterogeneous devices, in different physical contexts. Moreover, the way in which these people interact can vary significantly from one WebRTC service to the next, and this in turn can affect how the underlying technical factors affect the QoE of the users.

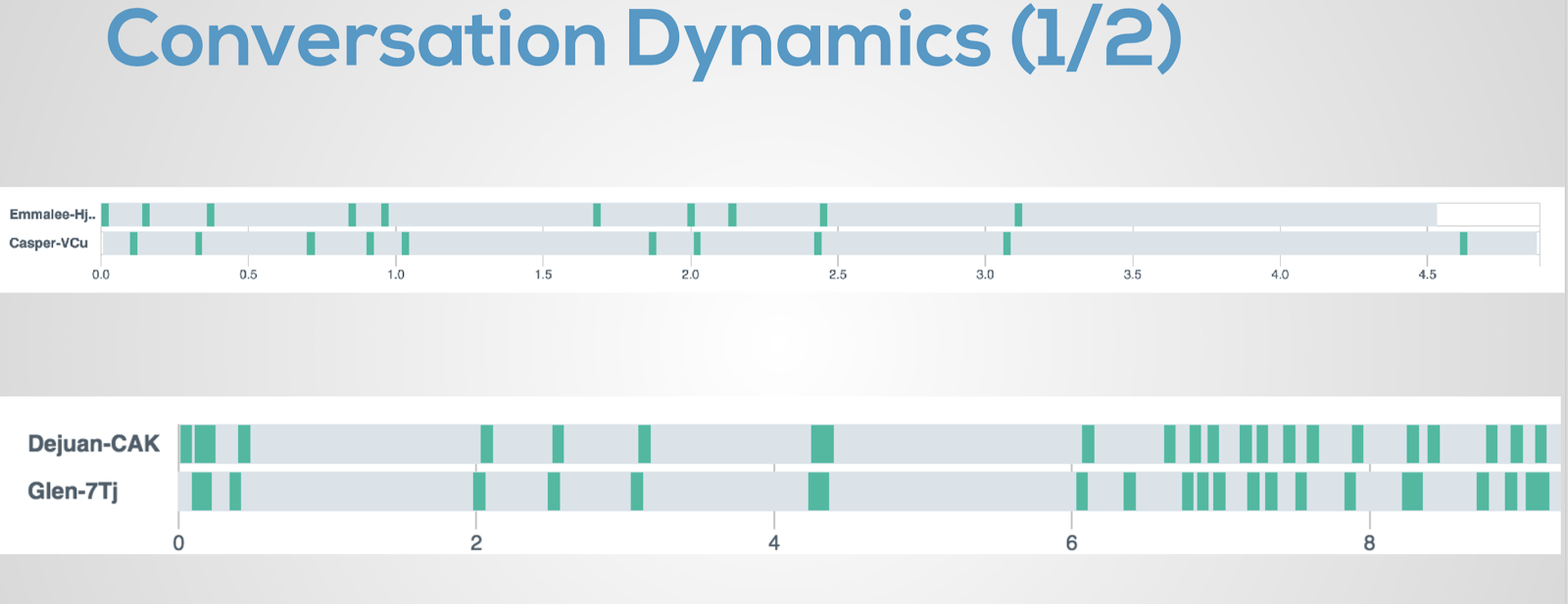

Figure 1: Conversational Dynamics: The impact of latency.

As a simple example, consider the following: it is well-known that network latency affects the conversational quality of a call. The higher the latency, the more difficult it becomes to interact with the other party (or parties). For WebRTC services, however, there are many different use cases for which the interaction aspects of the call vary wildly; think for instance of someone giving a lecture for a remote audience, as opposed to two colleagues having a heated argument about a project’s schedule. In the former case, the impact of latency on the overall QoE will be significantly lower than in the latter case, in which interaction needs to be much more fluid for the conversation to be properly carried out.

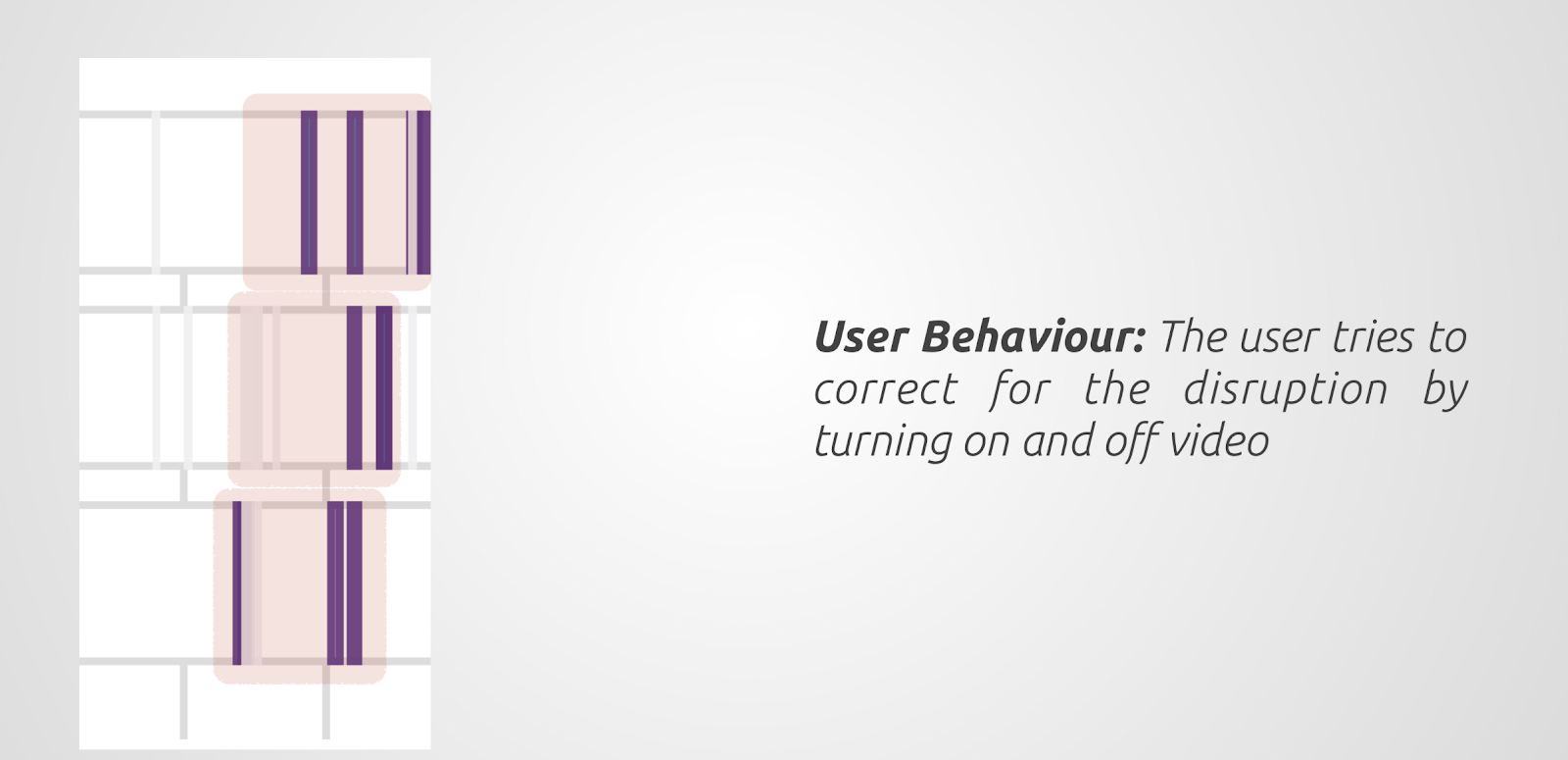

Figure 2: User Behavior: How the user attempts to correct for poor quality.

Unique QoE challenges of WebRTC

Without going into too much detail, we can list the main challenges of understanding and monitoring QoE for WebRTC services, based on their characteristics:

Multi-party

WebRTC services can (and often do) include multiple parties. The way that the overall QoE of the service is to be understood is significantly more complex than when there are only two people on a call, or a single user using e.g., a streaming service. The different participants will likely experience the service in different ways depending on various aspects such as their network connectivity, their role in the call (mostly listening, mostly speaking, etc.), the device they use to connect, etc.
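To illustrate why a single number is not enough in the multi-party case, here is a small sketch that summarizes hypothetical per-participant quality scores for one call. The participant names, the scores, the 3.0 threshold, and the choice of summary statistics are all assumptions made for the example.

```python
from statistics import mean


def summarize_call(scores, poor_threshold=3.0):
    """Summarize per-participant quality scores (1-5 scale) for one call.

    The mean alone can hide one participant having a bad time, so the
    worst score and the share of poor experiences are reported as well.
    """
    if not scores:
        raise ValueError("need at least one participant")
    worst_id = min(scores, key=scores.get)
    poor = sum(1 for s in scores.values() if s < poor_threshold)
    return {
        "mean": mean(scores.values()),
        "worst": scores[worst_id],
        "worst_participant": worst_id,
        "share_poor": poor / len(scores),
    }


# Hypothetical estimated scores for a four-party call.
scores = {"alice": 4.3, "bob": 4.1, "carol": 2.2, "dave": 3.9}
summary = summarize_call(scores)
print(summary)
```

In this made-up call the average looks acceptable even though one participant has a poor experience, and if that participant happens to be the main speaker, the call as a whole may well be perceived as bad.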

Multi-modal

WebRTC services often include audio, video, screen sharing, and chat, all at the same time. While there is some understanding in the scientific literature on how audio and video interact from a QoE point of view for streaming services, the way in which different modalities can interact in a WebRTC service is much more complex. For example, audio/video sync is an issue when people are conversing with each other and less of an issue when doing screen sharing or during a presentation.

Multiple Use Cases

Some services (e.g., video streaming, VoIP) are used more or less in the same fashion all the time. WebRTC services can be much more varied in how they are used. This leads to different interaction modes, and different impacts for each modality used. All of this makes understanding and modeling QoE for WebRTC services very complicated.

QoE at callstats.io

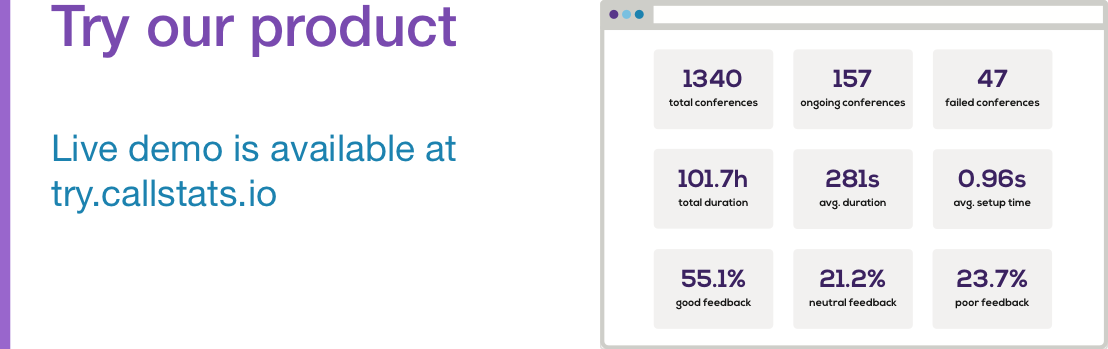

At callstats.io we aim to provide our customers with useful, actionable information about how well their WebRTC platforms are performing. Ideally, this means giving them a clear indication of what QoE they are delivering to their users.

As seen above, this is a complex problem to solve, and it is still very much an open one. Our approach to solving it is to start from simple, reliable measures, and combine them in ways that bring us closer to understanding how the end-users of each service experience the use of the service.

As we develop this understanding further, we will keep adding value to the metrics we provide, ensuring that they remain clear and informative (for understanding the business-level impact of the service’s performance and quality), but also actionable (for allowing engineers to continually improve the quality of the service they deliver).

Want to improve the quality of experience for audio and video calls in your application? Demo our dashboard today.