The WebRTC Working Group held an interim meeting last month, on June 19 and 20, to discuss the future of WebRTC. All browser vendors made encouraging remarks about completing WebRTC v1.0, a commitment reinforced by the June 2018 update to the WebRTC v1.0 specification. Besides bug fixes, that update added the following new APIs: RTCRtpSender.setStreams(), RTCRtpTransceiver.currentDirection, RTCSctpTransport.maxChannels, RTCPeerConnection.onstatsended, and the RTCStatsEvent interface.

In a previous article, we discussed the possible evolution of ICE after WebRTC v1.0. In this article, we discuss the possible evolution of WebRTC as a whole.

Use Cases of WebRTC

Before we consider the evolution of the WebRTC API, we should consider use cases. When we built WebRTC v1.0 in 2011, we discussed several use cases and requirements. One of the things that has changed significantly since 2011 is the world of apps. Mobile apps, virtual reality, augmented reality, and other platforms now offer end users fully immersive experiences. Photos have also taken a strong hold on the internet, and interactive sites have become the norm. Because of this, any change to the existing WebRTC API, and its further evolution, should be motivated by these new and emerging use cases.

Unfortunately, some of these use cases cannot be properly implemented with the current WebRTC API, so API enhancements are needed. These enhancements fall into two non-mutually exclusive categories: enabling new use cases and making development easier.

Unified Media and Data

Use cases for unified media and data were discussed at the meeting under several broad categories, including:

- Synchronizing several media and data streams

- IoT device communication

- Live streaming

- Gaming, including VR/AR

For more information about emerging use cases in WebRTC, check out our report on The Future of WebRTC: Innovative Use Cases of Real-time Audio and Video Communication, made in collaboration with Disruptive Analysis.

Media Pipeline Control

Several reasons for gaining more control over the media pipeline were discussed at the meeting, including:

- Pluggable Congestion Control: There were several proponents of pluggable congestion control, including callstats.io. One of the major reasons motivating it is the use of multiple paths. We have long-standing investments in this space, including work by several team members on multimedia congestion control and related optimizations. For example, Varun and Joerg worked on Multipath RTP (MPRTP); see the presentation from 2013.

- Override of Browser-implemented Algorithms: Being able to override the browser-implemented algorithms would let developers bring their own jitter buffer (which would be essential for multipath), FEC algorithm (e.g., LDPC, Raptor), encoder and decoder (codec), and so on.
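To make "pluggable congestion control over multiple paths" concrete, here is a minimal sketch of what an app-supplied controller could look like. The `CongestionController` interface, the `PathFeedback` shape, and the path names are hypothetical: WebRTC v1.0 has no such plug-in point. The algorithm is a deliberately simple loss-based AIMD (additive increase, multiplicative decrease) swapped in for illustration; it is neither the browser's built-in controller nor the MPRTP algorithm.

```typescript
// Hypothetical plug-in interface: nothing like this exists in WebRTC v1.0.
interface PathFeedback {
  pathId: string;   // e.g. "wifi" or "lte"; identifiers are illustrative
  lossRate: number; // fraction of packets lost in the last report interval (0..1)
}

interface CongestionController {
  onFeedback(feedback: PathFeedback): void;
  targetBitrate(pathId: string): number; // bits per second
}

// Loss-based AIMD, keeping one independent rate per path.
// All constants below are illustrative, not tuned values.
class MultipathAimdController implements CongestionController {
  static readonly MIN_BPS = 50000;
  static readonly MAX_BPS = 2500000;
  static readonly STEP_BPS = 50000;      // additive increase per loss-free report
  static readonly LOSS_THRESHOLD = 0.02; // back off when loss exceeds 2%
  static readonly BACKOFF = 0.5;         // multiplicative decrease factor

  private readonly rates = new Map<string, number>();

  constructor(pathIds: string[], initialBps = 300000) {
    for (const id of pathIds) this.rates.set(id, initialBps);
  }

  onFeedback({ pathId, lossRate }: PathFeedback): void {
    const current = this.rates.get(pathId);
    if (current === undefined) throw new Error(`unknown path: ${pathId}`);
    const C = MultipathAimdController;
    const next = lossRate > C.LOSS_THRESHOLD ? current * C.BACKOFF : current + C.STEP_BPS;
    this.rates.set(pathId, Math.min(C.MAX_BPS, Math.max(C.MIN_BPS, next)));
  }

  targetBitrate(pathId: string): number {
    return this.rates.get(pathId) ?? 0;
  }

  // Aggregate rate an encoder could target when striping media over all paths.
  totalBitrate(): number {
    return Array.from(this.rates.values()).reduce((sum, bps) => sum + bps, 0);
  }
}
```

Starting both paths at 300 kbps, a loss-free report on "wifi" and a 10% loss report on "lte" move them to 350 kbps and 150 kbps, for a 500 kbps aggregate. Keeping per-path state is the point: a single-path controller would see the "lte" loss and throttle the whole session.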

Next Steps for WebRTC

At the meeting, Peter Thatcher from Google presented likely evolutions of WebRTC, and each proposal was debated with great excitement and enthusiasm. The rest of this article discusses these proposals.

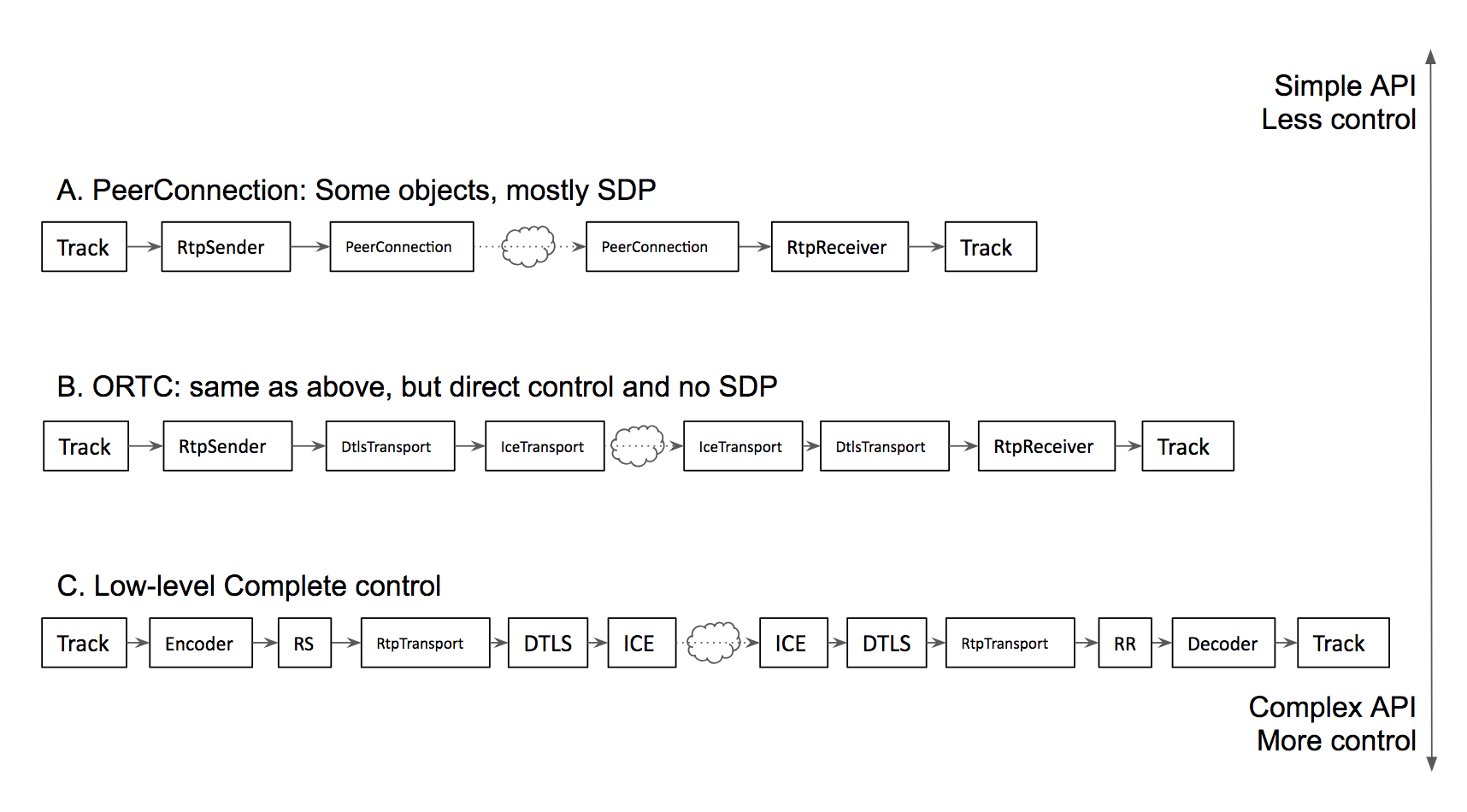

Keep in mind that with each evolution of the API, the application developer gets more control over the real-time communication pipeline. This means that the API becomes more complex, and the app gains flexibility but also takes on more responsibility for correctly handling signaling and, consequently, capability negotiation between endpoints.
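As an example of that extra responsibility, an app without SDP would have to compute the capabilities both endpoints share. A minimal sketch, assuming each side has already sent its codec list over the app's own signaling channel: the field names mirror WebRTC's `RTCRtpCodecCapability` (`mimeType`, `clockRate`), but `negotiateCodecs` and its matching rule are hypothetical simplifications that ignore format parameters such as H.264 profiles.

```typescript
// Simplified codec description; fields mirror RTCRtpCodecCapability.
interface CodecCapability {
  mimeType: string;  // e.g. "video/VP8"
  clockRate: number; // Hz
}

// Hypothetical helper: return the codecs both endpoints support,
// in the local endpoint's order of preference.
function negotiateCodecs(local: CodecCapability[], remote: CodecCapability[]): CodecCapability[] {
  // MIME types are case-insensitive, so normalize before comparing.
  const key = (c: CodecCapability) => `${c.mimeType.toLowerCase()}/${c.clockRate}`;
  const remoteKeys = new Set(remote.map(key));
  return local.filter((c) => remoteKeys.has(key(c)));
}
```

In a browser, the local list could come from `RTCRtpSender.getCapabilities("video").codecs`. If the local side prefers VP9, VP8, H264 and the remote side offers H264 and VP8, the result is VP8 followed by H264.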

Generally, we at callstats.io believe that giving more control to the application developer will lead to better products. First, some of the complexity of these protocols and algorithms can move out of the browser, where it has proven hard to standardize (as the SDP “plan wars” between Plan B and Unified Plan demonstrated). Second, web developers already know how to shim complexity and make it available to other developers. In other words, developers can stand on the shoulders of giants.

Figure 1: Evolution beyond WebRTC v1.0

ORTC

Object Real-time Communications (ORTC) has existed as a parallel effort to WebRTC over the past few years, and several objects first proposed in ORTC were added to WebRTC v1.0. ORTC does away with SDP as the control surface and gives direct control over some objects that are currently not available in WebRTC v1.0. More objects mean more direct control. For example, with ORTC you can use and control both temporal and spatial scalability in Scalable Video Coding (SVC).
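To illustrate what temporal scalability offers, the sketch below uses the common three-layer temporal pattern (often written L1T3), where frames cycle through layers 0, 2, 1, 2. It is not the ORTC API; the function names are hypothetical. It only shows why controlling layers is useful: a receiver, or a server forwarding on its behalf, can drop the higher layers to lower the frame rate without breaking decoding of the frames that remain.

```typescript
// Three temporal layers: frames cycle through layers 0, 2, 1, 2.
// Layer 0 alone is 1/4 of the full frame rate, layers 0-1 are 1/2, layers 0-2 are full.
const L1T3_PATTERN: number[] = [0, 2, 1, 2];

function temporalLayerOf(frameIndex: number): number {
  return L1T3_PATTERN[frameIndex % L1T3_PATTERN.length];
}

// Hypothetical helper: indices of the frames to keep when only layers <= maxLayer
// are wanted. Frames in higher layers are never references for lower ones,
// so the kept frames remain decodable.
function framesToForward(frameCount: number, maxLayer: number): number[] {
  const kept: number[] = [];
  for (let i = 0; i < frameCount; i++) {
    if (temporalLayerOf(i) <= maxLayer) kept.push(i);
  }
  return kept;
}
```

For a 30 fps source, forwarding up to layer 2, 1, or 0 yields roughly 30, 15, or 7.5 fps respectively.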

Pluggable Transports

Taking the idea of splitting the media pipeline into objects further, pluggable transports give the app more control over the pipeline, for example, to add metadata to encoded frames (or remove it from received frames), or to control media quality at a fine-grained level.
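As a sketch of the "add or remove metadata" case: if the app could intercept encoded frames before packetization, it could append its own metadata on the sending side and strip it on the receiving side. The trailer layout and both function names below are hypothetical assumptions for illustration, not a standard format or a browser API.

```typescript
// Hypothetical trailer layout (an assumption, not a standard):
// [ encoded payload | metadata bytes | 2-byte big-endian metadata length ]
function attachMetadata(frame: Uint8Array, metadata: Uint8Array): Uint8Array {
  if (metadata.length > 0xffff) throw new RangeError("metadata too large");
  const out = new Uint8Array(frame.length + metadata.length + 2);
  out.set(frame, 0);
  out.set(metadata, frame.length);
  out[out.length - 2] = (metadata.length >> 8) & 0xff;
  out[out.length - 1] = metadata.length & 0xff;
  return out;
}

// Receiving side: split the trailer off again before handing the frame to the decoder.
function stripMetadata(data: Uint8Array): { frame: Uint8Array; metadata: Uint8Array } {
  if (data.length < 2) throw new RangeError("data too short for trailer");
  const n = (data[data.length - 2] << 8) | data[data.length - 1];
  const frameEnd = data.length - 2 - n;
  if (frameEnd < 0) throw new RangeError("corrupt trailer");
  return {
    frame: data.subarray(0, frameEnd),
    metadata: data.subarray(frameEnd, data.length - 2),
  };
}
```

Putting the length at the end, rather than the start, lets the receiver locate the metadata without knowing the codec's payload format.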

To accomplish this, the app would need more control over the media transport pipeline. This could be achieved by separating the encoder and decoder from the RTCRtpSender and RTCRtpReceiver, respectively. It would also allow media and data to be sent over different transports, for example, RTP over UDP, QUIC, or SCTP. Beyond pluggable transports, this separation would enable large-scale conferencing services to use different encryption keys for hop-by-hop encryption (via DTLS/SRTP) and end-to-end encryption (e.g., Double/Triple in PERC).
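The separation described above can be sketched as a packetizer that accepts encoded frames from any encoder and hands packets to any transport implementing a one-method interface. `PacketTransport`, `FramePacketizer`, and the 3-byte header are hypothetical simplifications (the header is not RTP); the point is only that the sender no longer cares which encoder produced the frame or which transport carries the packets.

```typescript
// Hypothetical transport abstraction: the sender only needs "send a packet".
interface PacketTransport {
  send(packet: Uint8Array): void;
}

// Minimal illustrative header (not RTP): 2-byte sequence number + 1-byte "last fragment" flag.
const HEADER_BYTES = 3;

class FramePacketizer {
  private seq = 0;
  private readonly transport: PacketTransport;
  private readonly mtu: number;

  constructor(transport: PacketTransport, mtu = 1200) {
    if (mtu <= HEADER_BYTES) throw new RangeError("mtu too small");
    this.transport = transport;
    this.mtu = mtu;
  }

  // Split one encoded frame (from any encoder) into MTU-sized packets; returns the packet count.
  sendFrame(frame: Uint8Array): number {
    const maxPayload = this.mtu - HEADER_BYTES;
    let sent = 0;
    for (let offset = 0; offset < frame.length; offset += maxPayload) {
      const chunk = frame.subarray(offset, offset + maxPayload);
      const packet = new Uint8Array(HEADER_BYTES + chunk.length);
      packet[0] = (this.seq >> 8) & 0xff;
      packet[1] = this.seq & 0xff;
      packet[2] = offset + maxPayload >= frame.length ? 1 : 0; // marks the frame's last packet
      packet.set(chunk, HEADER_BYTES);
      this.transport.send(packet);
      this.seq = (this.seq + 1) & 0xffff; // wrap at 16 bits
      sent++;
    }
    return sent;
  }
}

// Example transport that just records packets; a real app could put a UDP socket,
// a QUIC stream, or an RTCDataChannel behind the same interface.
class RecordingTransport implements PacketTransport {
  readonly packets: Uint8Array[] = [];
  send(packet: Uint8Array): void {
    this.packets.push(packet);
  }
}
```

With a 1200-byte MTU, a 2500-byte frame becomes three packets carrying 1197, 1197, and 106 payload bytes, with only the last one flagged as the end of the frame.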

Raw Media and Complete Control

Providing complete control over the pipeline would put the app in full control of encoding and decoding (SVC, simulcast, or choice of codec), media congestion control, security (any form of encryption), packetization of media frames (FEC, RTX, etc.), synchronization of media tracks at the decoding endpoint, and more. Consequently, this flexibility requires the application to do more, but developers can opt out if they prefer. This assumes that browsers implement or allow a bring-your-own model, where custom app components override default browser behavior.
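As an example of a component an app might bring itself, here is the simplest form of packet-level FEC: one XOR parity packet per group, which lets the receiver rebuild any single lost packet in that group. This is plain XOR parity, swapped in for illustration; it is far weaker than the LDPC or Raptor codes mentioned earlier, and the function names are hypothetical.

```typescript
// Build one parity packet over a group by XOR-ing the packets byte by byte.
// Shorter packets are treated as zero-padded; this sketch does not protect packet
// lengths (RFC 5109 generic FEC, for example, also carries length-recovery data).
function xorParity(packets: Uint8Array[]): Uint8Array {
  const len = Math.max(0, ...packets.map((p) => p.length));
  const parity = new Uint8Array(len);
  for (const p of packets) {
    for (let i = 0; i < p.length; i++) parity[i] ^= p[i];
  }
  return parity;
}

// Recover a single missing packet: XOR the parity with every packet that did arrive.
// The result has the parity's length, so a shorter lost packet comes back zero-padded.
function recoverMissing(received: Uint8Array[], parity: Uint8Array): Uint8Array {
  return xorParity([parity, ...received]);
}
```

The trade-off is overhead versus resilience: one parity packet per group of k packets costs 1/k extra bandwidth but repairs only one loss per group.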

Summary

The evolution comprises two aspects:

- Create more objects for components in the real-time communications pipeline for audio, video, and data.

- Give access to raw media.

There are several concerns regarding this evolution:

A. Encrypted or unencrypted raw media.

B. It could already be done today.

C. JavaScript is not timely.

Regarding security (concern A), for the sake of argument, we can assume that the raw media is encrypted and that the app has no access to unencrypted media.

Regarding concern B, “it could already be done today”: we already have WebAudio, which gives a lot of control over audio capture and rendering. For video, things are more convoluted, but it can still be done, for example, by rendering a MediaStream's video into a canvas and capturing the canvas back into a MediaStream. If you are interested in a follow-up blog post on this, leave us a comment or ping us @callstatsio on Twitter.
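The canvas route works roughly like this: draw each video frame into a canvas with `drawImage()`, read the pixels with `getImageData()`, modify them, write them back with `putImageData()`, and expose the canvas as a new MediaStream with `canvas.captureStream()`. The sketch below shows only the per-frame processing step, as a hypothetical grayscale filter over the RGBA byte layout that `ImageData.data` uses; the browser plumbing around it is assumed rather than shown.

```typescript
// Convert RGBA pixels (ImageData.data layout: 4 bytes per pixel) to grayscale in place,
// using the ITU-R BT.601 luma weights. The filter itself is illustrative.
function toGrayscale(rgba: Uint8ClampedArray): void {
  for (let i = 0; i + 3 < rgba.length; i += 4) {
    const y = 0.299 * rgba[i] + 0.587 * rgba[i + 1] + 0.114 * rgba[i + 2];
    // Uint8ClampedArray rounds and clamps each assigned value to 0..255.
    rgba[i] = y;
    rgba[i + 1] = y;
    rgba[i + 2] = y;
    // Alpha (rgba[i + 3]) is left untouched.
  }
}
```

Because this loop touches every byte of every frame, where it runs matters; that is exactly the timeliness concern discussed next.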

Regarding concern C, JavaScript not being timely: that is indeed true. If the complete pipeline is managed in the main thread, the result would be 1 frame per second or lower! For this to work, we would need a slew of new JavaScript and browser features, such as Web Workers and WebAssembly (wasm). Beyond that, web apps would need access to the raw media stream, and the app would need to be able to rely on its tasks being scheduled and completed predictably and efficiently, amongst other JavaScript quirks.
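The underlying arithmetic is a per-frame time budget: at a target rate of f frames per second, every stage of the pipeline that runs on the same thread must finish within 1000 / f milliseconds. The sketch below computes the budget and the frame rate a single thread could sustain; the stage names and costs in any example are placeholders, not measurements.

```typescript
// Hypothetical per-frame pipeline stage and its cost in milliseconds.
interface StageCost {
  name: string;
  ms: number;
}

// Time available per frame at a given frame rate.
function frameBudgetMs(fps: number): number {
  return 1000 / fps;
}

// Highest frame rate one thread can sustain if all stages run sequentially on it,
// capped at the target rate.
function sustainableFps(stages: StageCost[], targetFps: number): number {
  const totalMs = stages.reduce((sum, s) => sum + s.ms, 0);
  return totalMs <= 0 ? targetFps : Math.min(targetFps, 1000 / totalMs);
}
```

For example, at 30 fps the budget is about 33.3 ms. Stages costing 12 ms and 8 ms fit, but adding 30 ms of unrelated main-thread work pushes the total to 50 ms and caps the pipeline at 20 fps, which is why moving the work off the main thread (Web Workers) and speeding it up (wasm) both matter.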

We look forward to the upcoming TPAC in Lyon (October 2018), where these proposals will be discussed in more detail, based on contributions made in the intervening period.

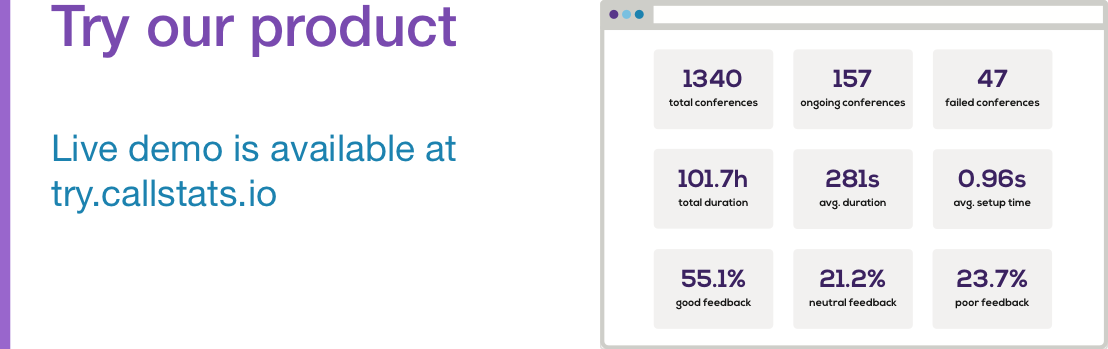

Want to learn more about WebRTC? Check out our latest WebRTC industry report, with metrics from our WebRTC analytics service.