At callstats.io, we have been working on evaluating media quality for the last 12 years. This work began as part of my research on congestion control and later became a callstats.io product. We started evaluating media quality in the context of multimedia congestion control, to improve the performance of bandwidth estimation algorithms. Typically, more available bandwidth means the encoder can allocate more bits to quality (a lower Quantization Parameter) or increase the frame rate or frame size.

One of the central tenets we build into congestion control algorithms is that changing media characteristics too much in a very short period of time results in a poor quality of experience. This differs slightly from ITU-T Study Group 12's (SG12) definition of Quality of Experience (QoE): the degree of delight or annoyance of the user of an application or service.

QoE assessment is the process of measuring or estimating the user's quality rating of a service or application. According to SG12, a QoE assessment is considered strong when it includes many of the specific influencing factors, that is, a majority of the known factors. Conversely, a weak QoE assessment includes only one or a small number of factors.

Streaming media, whether live or stored, is easier to assess because the received video can be compared against the original using well-defined metrics. The major metrics affecting streaming QoE are the initial buffering duration, the number of rebuffering events (frequency), and the duration of those events (magnitude). By contrast, QoE assessment for real-time communication is intrinsically hard and complex, because the list of possible influencing factors is extensive and varies greatly with the circumstances. For example, if your phone's battery is almost empty, you may prefer low-quality video and be satisfied with it, because it saves battery life.
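To make the three streaming metrics concrete, here is a minimal sketch that derives them from a player's event log. The `PlaybackEvent` type and the event names `play_start`, `stall_start`, and `stall_end` are hypothetical, chosen for illustration; a real player exposes its own events.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PlaybackEvent:
    t: float   # seconds since the user requested playback
    kind: str  # hypothetical names: "play_start", "stall_start", "stall_end"


def streaming_qoe(events: List[PlaybackEvent]) -> Dict[str, Optional[float]]:
    """Initial buffering, rebuffering frequency, and rebuffering magnitude."""
    initial_buffering: Optional[float] = None
    rebuffer_count = 0
    rebuffer_total = 0.0
    stall_started: Optional[float] = None
    for ev in sorted(events, key=lambda e: e.t):
        if ev.kind == "play_start" and initial_buffering is None:
            # Time from the playback request to the first rendered frame.
            initial_buffering = ev.t
        elif ev.kind == "stall_start" and initial_buffering is not None and stall_started is None:
            # A stall after playback has started counts as a rebuffering event.
            stall_started = ev.t
            rebuffer_count += 1
        elif ev.kind == "stall_end" and stall_started is not None:
            rebuffer_total += ev.t - stall_started
            stall_started = None
    return {
        "initial_buffering_s": initial_buffering,
        "rebuffer_count": rebuffer_count,
        "rebuffer_total_s": rebuffer_total,
    }


events = [
    PlaybackEvent(1.2, "play_start"),
    PlaybackEvent(10.0, "stall_start"),
    PlaybackEvent(11.5, "stall_end"),
    PlaybackEvent(30.0, "stall_start"),
    PlaybackEvent(30.5, "stall_end"),
]
print(streaming_qoe(events))
# {'initial_buffering_s': 1.2, 'rebuffer_count': 2, 'rebuffer_total_s': 2.0}
```

Note that none of these inputs exist for a two-way call in the same form, which is part of why real-time QoE needs a different set of observations.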

How We Measure Annoyance

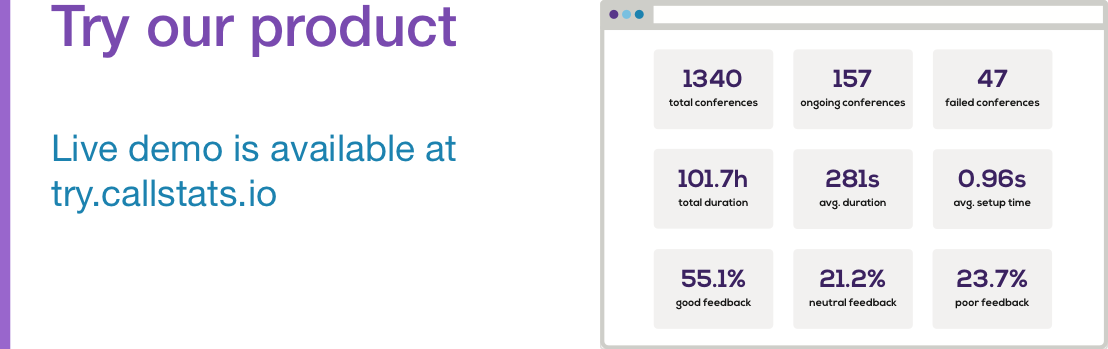

This brings us to how we tackle measuring media quality at callstats.io. Our measurements span three phases of a call: pre-call tests, call setup, and the call experience itself.

1. Pre-call Test (Before the Call)

The pre-call tests run in the background and perform active measurements. See our latest blog post describing pre-call tests at callstats.io. Pre-call tests evaluate network performance before the call is initiated, which lets applications indicate the expected quality up front and set appropriate expectations for the end user.
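As an illustration of what an active measurement can yield, the sketch below summarizes a train of echoed probe packets into loss, mean round-trip time, and jitter. It assumes a hypothetical probe format (send time and echo-return time on the client's own clock, `None` for lost probes) and applies the RFC 3550 interarrival-jitter smoothing to round-trip-time deltas; it is not the callstats.io pre-call test itself.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List, Optional


@dataclass
class Probe:
    sent_at: float                # client clock, seconds
    returned_at: Optional[float]  # client clock when the echo arrived; None if lost


def summarize_probes(probes: List[Probe]) -> dict:
    """Loss rate, mean RTT, and a smoothed jitter estimate from echoed probes."""
    ordered = sorted(probes, key=lambda p: p.sent_at)
    rtts = [p.returned_at - p.sent_at for p in ordered if p.returned_at is not None]
    loss = 1 - len(rtts) / len(ordered) if ordered else 0.0
    jitter = 0.0
    for prev, cur in zip(rtts, rtts[1:]):
        # RFC 3550-style estimator, J += (|D| - J) / 16, applied to RTT deltas.
        jitter += (abs(cur - prev) - jitter) / 16
    return {
        "loss": loss,
        "mean_rtt_s": mean(rtts) if rtts else None,
        "jitter_s": jitter,
    }


probes = [Probe(0.0, 0.050), Probe(0.1, 0.160), Probe(0.2, None), Probe(0.3, 0.350)]
print(summarize_probes(probes))
```

Measuring round trips on a single clock avoids the clock-synchronization problem that one-way delay measurements would introduce.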

2. Call Setup (Initially)

As with video streaming, long setup times are frustrating, especially when the end user does not know why the call is taking so long to connect. We capture this by measuring, for example, the connectivity setup time or the time to first media.
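A minimal sketch of these two setup measurements, assuming the application records wall-clock timestamps at a few milestones. The milestone names (`call_start`, `connected`, `first_media`) are hypothetical labels for illustration.

```python
from typing import Dict, Optional


def setup_metrics(marks: Dict[str, float]) -> Dict[str, Optional[float]]:
    """Call-setup durations, in seconds, measured from the "call_start" milestone.

    Milestone names are hypothetical: "call_start" (user starts the call),
    "connected" (e.g. ICE connectivity established), and "first_media"
    (first audio or video frame played out).
    """
    start = marks.get("call_start")

    def since_start(name: str) -> Optional[float]:
        t = marks.get(name)
        # A missing milestone means the phase never completed (or was not logged).
        return None if start is None or t is None else t - start

    return {
        "connectivity_setup_s": since_start("connected"),
        "time_to_first_media_s": since_start("first_media"),
    }


print(setup_metrics({"call_start": 10.0, "connected": 11.25, "first_media": 12.5}))
# {'connectivity_setup_s': 1.25, 'time_to_first_media_s': 2.5}
```

Returning `None` rather than a number keeps calls that never reached media distinguishable from calls that merely connected slowly.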

3. Call Experience (Within the Call)

Call experience is arguably the most interesting part of measuring annoyance, as there are several elements to measuring quality.

- User Presence - In some apps or services, call duration is an important measure of engagement. It is especially useful when compared against user expectations, such as how long a call was expected to last. It is also worth noting whether a user rejoins a session and, if so, why.

- Media Disruptions - Media disruptions are annoying, especially when playback is not smooth. If media repeatedly freezes and unfreezes, or its quality fluctuates over short time intervals, some users will leave the call entirely. Mismatches between media streams can be equally frustrating; for example, audio and video that are slightly out of sync irritate users.

- Conversational and Layout Dynamics - The simplest assumption is that, at any given time, the active speaker's quality should have the greatest impact on the quality score. However, in large conferences, aggregating media quality is complex because the score needs to account for factors such as who the active speaker is and which participants each viewer is actually watching.
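Freezes, the first kind of media disruption listed above, can be detected from the times at which video frames are rendered. The sketch below uses a threshold similar to the heuristic the W3C webrtc-stats specification describes for `freezeCount`: a gap counts as a freeze when it is at least max(3 × average frame interval, average frame interval + 150 ms). The 30-frame averaging window and the choice to keep freezes out of the running average are assumptions of this sketch.

```python
from collections import deque
from typing import List, Sequence, Tuple


def detect_freezes(render_times_ms: Sequence[float], window: int = 30) -> List[Tuple[float, float]]:
    """Return (freeze_start_ms, gap_ms) for each detected freeze.

    A gap counts as a freeze when it is at least
    max(3 * avg_interval, avg_interval + 150 ms), where avg_interval is the
    mean of up to `window` preceding non-freeze inter-frame intervals.
    """
    freezes = []
    recent = deque(maxlen=window)
    for prev, cur in zip(render_times_ms, render_times_ms[1:]):
        gap = cur - prev
        if recent:
            avg = sum(recent) / len(recent)
            if gap >= max(3 * avg, avg + 150):
                freezes.append((prev, gap))
                continue  # keep freezes out of the running average
        recent.append(gap)
    return freezes


# ~30 fps video with one 500 ms stall after the tenth frame
times = [i * 33 for i in range(10)] + [797 + i * 33 for i in range(5)]
print(detect_freezes(times))  # [(297, 500)]
```

Counting freezes and summing their durations gives freeze frequency and magnitude, the real-time analogue of the rebuffering metrics used for streaming.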

See our latest blog post on our ongoing work on Objective Quality.
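The active-speaker weighting described under Conversational and Layout Dynamics can be sketched as a weighted average. This assumes each sent stream already has a quality score, that we know which streams each viewer has on screen, and that the active speaker's stream gets a hypothetical weight of 3; it is an illustration of the idea, not our production scoring model.

```python
from typing import Dict, List, Optional


def viewer_score(stream_scores: Dict[str, float], viewed: List[str],
                 active_speaker: str, speaker_weight: float = 3.0) -> float:
    """Weighted mean quality of the streams one participant is actually viewing."""
    weights = {p: (speaker_weight if p == active_speaker else 1.0) for p in viewed}
    total = sum(weights.values())
    return sum(stream_scores[p] * w for p, w in weights.items()) / total


def conference_score(stream_scores: Dict[str, float], layouts: Dict[str, List[str]],
                     active_speaker: str, speaker_weight: float = 3.0) -> Optional[float]:
    """Average per-viewer scores; `layouts` maps each viewer to the streams on their screen."""
    per_viewer = [viewer_score(stream_scores, viewed, active_speaker, speaker_weight)
                  for viewed in layouts.values() if viewed]
    return sum(per_viewer) / len(per_viewer) if per_viewer else None


scores = {"alice": 4.5, "bob": 2.0, "carol": 4.0}        # hypothetical 1-5 scale
layouts = {"alice": ["bob", "carol"], "bob": ["alice"], "carol": ["bob"]}
print(conference_score(scores, layouts, active_speaker="bob"))  # 3.0
```

Because Bob is speaking and his stream is poor, the conference score drops well below the plain average of the three streams, which matches the intuition that the active speaker matters most. A fuller model would also score each stream per receiver, since the same stream can arrive at different quality at different viewers.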

Various system and WebRTC APIs assist in measuring the above metrics. Modeling the variation of these events and metrics over time provides insights into the degradation and improvement in media quality.
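One simple way to model variation over time, in the spirit of the tenet that changing media characteristics too much in a short period hurts QoE, is a rolling coefficient of variation over a metric such as received bitrate. The window length of 5 samples and the 0.3 threshold are hypothetical values for illustration.

```python
from statistics import mean, pstdev
from typing import List, Sequence


def rolling_variation(samples: Sequence[float], n: int = 5) -> List[float]:
    """Coefficient of variation (population stdev / mean) of each run of n samples."""
    out = []
    for start in range(len(samples) - n + 1):
        window = samples[start:start + n]
        m = mean(window)
        out.append(pstdev(window) / m if m else 0.0)
    return out


def unstable_windows(samples: Sequence[float], n: int = 5, threshold: float = 0.3) -> List[int]:
    """Start indices of windows whose variation exceeds the (hypothetical) threshold."""
    return [i for i, cv in enumerate(rolling_variation(samples, n)) if cv > threshold]


# e.g. received video bitrate in kbps, sampled once per second
bitrate = [1000] * 5 + [200] + [1000] * 4
print(unstable_windows(bitrate))  # [1, 2, 3, 4, 5]
```

Normalizing by the mean makes the same drop count as equally disruptive at low and high bitrates, which is a design choice; an absolute threshold would instead favor high-bitrate calls.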

Measuring user annoyance directly is difficult, but we approximate it by measuring end-user interactivity during a call. For example, we monitor whether events observed during the call lead the end user to mute or pause the media stream. This is an indirect way to correlate user behavior with poor quality of experience. Additionally, our user feedback API directly captures end-user feedback and provides the ground truth for improving our quality models.
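A minimal sketch of the indirect correlation described above: given timestamps of detected disruptions and of user mute or pause actions, compute the fraction of those actions that follow a disruption within a lookback window. The 10-second window is a hypothetical choice, and a high fraction only suggests a link; comparing it with the rate for random times in the same call would be needed before reading it as cause and effect.

```python
from bisect import bisect_left
from typing import Sequence


def mutes_after_disruption(disruption_times: Sequence[float],
                           mute_times: Sequence[float],
                           lookback_s: float = 10.0) -> float:
    """Fraction of mute/pause actions preceded by a disruption within lookback_s seconds."""
    if not mute_times:
        return 0.0
    disruptions = sorted(disruption_times)
    hits = 0
    for t in mute_times:
        # First disruption at or after the start of the lookback window.
        i = bisect_left(disruptions, t - lookback_s)
        if i < len(disruptions) and disruptions[i] <= t:
            hits += 1
    return hits / len(mute_times)


# Disruptions at 5 s and 40 s; the user muted at 12 s, 41 s, and 100 s.
print(mutes_after_disruption([5.0, 40.0], [12.0, 41.0, 100.0]))
```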

You can read more about our congestion control work in my Ph.D. thesis, in Marcin and Balazs' work on FEC-based congestion control, and in our contributions to the IETF RMCAT WG.